Target Classification by mmWave FMCW Radars Using Machine Learning on Range-Angle Images

Target classification and object detection are essential features of applications such as surveillance and autonomous navigation. Vision-based detection systems train their models on recognisable features extracted from images or video, so their performance can deteriorate significantly on images captured in extreme weather or in poor lighting.

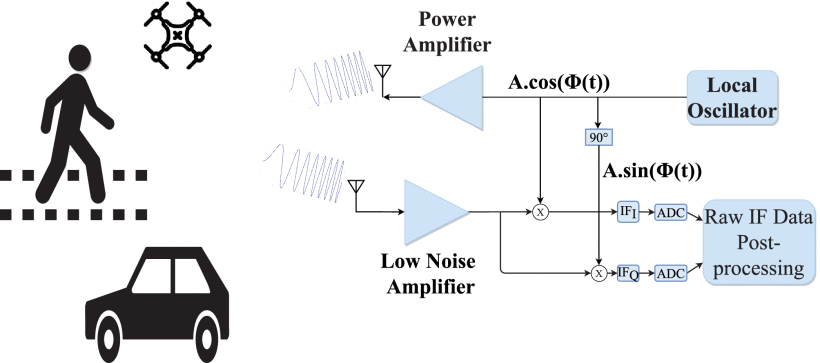

A millimeter-wave (mmWave) radar based on the frequency-modulated continuous wave (FMCW) principle is a compact, energy-efficient, high-resolution radar system. An FMCW radar measures the distance (range), angle of arrival (AoA), and radial velocity of objects in its field of view (FoV): range comes from the beat frequency between the transmitted and received chirps, velocity from the Doppler-induced phase shift across successive chirps, and AoA from the phase differences between receive antennas. mmWave FMCW radars also perform better than vision sensors in adverse weather. However, they cannot readily distinguish stationary targets, and they do not provide the outline characteristics of an object.
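To make the range measurement concrete, here is a minimal Python sketch, assuming an ideal, noise-free complex intermediate-frequency (IF) signal from a single stationary point target. For a chirp with slope S (Hz/s), a target at range R produces a beat frequency f_b = 2RS/c, so the peak of an FFT over the IF samples gives the range. The slope, sampling rate, and sample count are illustrative values, not the prototype's configuration; velocity and AoA would come from further FFTs across chirps and across antennas, which are omitted here.

```python
import numpy as np

C = 3e8  # speed of light (m/s), rounded for readability

def simulate_if_signal(target_range_m, slope_hz_per_s, fs_hz, n_samples):
    """Ideal complex IF (beat) signal for one stationary point target."""
    t = np.arange(n_samples) / fs_hz
    f_beat = 2.0 * target_range_m * slope_hz_per_s / C  # f_b = 2RS/c
    return np.exp(2j * np.pi * f_beat * t)

def estimate_range(if_signal, slope_hz_per_s, fs_hz):
    """Estimate target range from the peak of the windowed range FFT."""
    n = len(if_signal)
    spectrum = np.abs(np.fft.fft(if_signal * np.hanning(n)))
    peak_bin = int(np.argmax(spectrum[: n // 2]))  # keep non-negative beat frequencies
    f_beat = peak_bin * fs_hz / n
    return f_beat * C / (2.0 * slope_hz_per_s)     # invert f_b = 2RS/c

# Illustrative (hypothetical) chirp: 4 GHz swept in 40 us, sampled at 10 MHz
SLOPE = 4e9 / 40e-6
FS = 10e6
N = 256

estimated = estimate_range(simulate_if_signal(5.0, SLOPE, FS, N), SLOPE, FS)
print(f"estimated range: {estimated:.3f} m")
```

With these values each FFT bin spans about 5.9 cm of range, so the 5 m target is reported at roughly 4.98 m; finer estimates would need interpolation between bins.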

The researchers proposed a novel mmWave FMCW radar detection system that combines radar imaging with advanced deep-learning vision models. The proposed system is robust and accurately detects and classifies objects across multiple scenarios.

A radar's limited number of transceivers restricts its field of view, and adding transceiver antennas to widen the FoV increases hardware complexity, computational latency, and cost. In the proposed design, the radar antennas are oriented in the elevation direction while a mechanical rotor rotates them horizontally: the antenna orientation widens the elevation FoV, and the horizontal rotation covers the azimuth FoV.
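Because the antenna array resolves elevation and the rotor supplies azimuth, each detection can be described by its range, the rotor's azimuth angle, and the array's elevation angle. The minimal sketch below converts those three values into Cartesian coordinates. It assumes a frame with y along the radar boresight at rotor angle 0°, x to the right, and z up, and it assumes the 180° sweep is centred on boresight; neither convention is specified in the article.

```python
import math

def detection_to_xyz(range_m, azimuth_deg, elevation_deg):
    """Convert (range, rotor azimuth, array elevation) to Cartesian (x, y, z).

    Assumed frame: y = boresight at azimuth 0, x = right, z = up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the horizontal plane
    return (horizontal * math.sin(az),   # x
            horizontal * math.cos(az),   # y
            range_m * math.sin(el))      # z

# Assuming the 180-degree rotor sweep runs from -90 to +90 degrees azimuth
for az in (-90, 0, 90):
    x, y, z = detection_to_xyz(10.0, az, 0.0)
    print(f"az={az:+d} deg -> x={x:.2f}, y={y:.2f}, z={z:.2f}")
```

The design choice this illustrates is that only the azimuth coordinate depends on the rotor, so widening the azimuth coverage costs rotation time rather than extra antennas.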

The prototype consists of a Texas Instruments (TI) mmWave radar with a baseband mixer, an analog-to-digital converter (ADC), and a phase-locked loop (PLL). The radar operates from 77 to 81 GHz, giving a sweep bandwidth of 4 GHz. Mounted on a battery-powered, portable, and highly programmable rotor, the radar setup can cover a 180° span.
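The 4 GHz bandwidth sets the radar's theoretical range resolution through ΔR = c/(2B). A short Python check follows, assuming the full 77–81 GHz sweep is used within each chirp; in practice the effective bandwidth is the portion of the sweep actually sampled by the ADC, so the achieved resolution can be coarser.

```python
C = 299_792_458.0  # speed of light in vacuum (m/s)

def range_resolution_m(bandwidth_hz):
    """Theoretical FMCW range resolution, delta_R = c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)

# 77-81 GHz sweep -> B = 4 GHz
resolution = range_resolution_m(81e9 - 77e9)
print(f"range resolution: {resolution * 100:.2f} cm")
```

This gives about 3.75 cm: two reflectors closer together in range than that fall into the same range bin.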

In the proposed approach, the raw intermediate-frequency (IF) data collected from the mmWave radar is post-processed in MATLAB and transformed into range-angle heatmaps of the imaging area, and the objects in the heatmaps are labelled with their respective classes. An object detection technique based on a deep convolutional neural network is then applied to these heatmaps to accurately classify multiple object classes, such as humans, cars, and aerial vehicles like drones.
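The article performs this post-processing in MATLAB and does not detail the steps, so the Python sketch below shows only one plausible way to build a range-angle heatmap for a rotating radar. It assumes the IF samples are arranged as one block of chirps per rotor position (shape `(n_angles, n_chirps, n_samples)`), takes a windowed range FFT of every chirp, averages power over chirps, and maps a fixed dynamic range to an 8-bit image that an image-based detector can consume. The array layout, the 40 dB dynamic range, and the function name `range_angle_heatmap` are hypothetical.

```python
import numpy as np

def range_angle_heatmap(if_frames, dynamic_range_db=40.0):
    """Build an 8-bit range-angle image from per-rotor-position IF data.

    if_frames: complex array of shape (n_angles, n_chirps, n_samples)
               (hypothetical layout: one block of chirps per rotor angle).
    Returns a uint8 array of shape (n_samples // 2, n_angles):
    rows are range bins, columns are rotor angles.
    """
    n_samples = if_frames.shape[-1]
    window = np.hanning(n_samples)
    # Range FFT along fast time for every chirp at every rotor angle
    range_fft = np.fft.fft(if_frames * window, axis=-1)[..., : n_samples // 2]
    # Non-coherent integration: average power over chirps
    power = np.mean(np.abs(range_fft) ** 2, axis=1)
    power_db = 10.0 * np.log10(power + 1e-12)  # small offset avoids log(0)
    # Keep the top `dynamic_range_db` below the peak and scale to 0..255
    floor = power_db.max() - dynamic_range_db
    scaled = (np.clip(power_db, floor, None) - floor) / dynamic_range_db * 255.0
    return np.round(scaled).astype(np.uint8).T

# Synthetic example: 90 rotor angles, 8 chirps, 128 samples, with one
# point target at range bin 20 seen only at rotor angle index 10.
n_angles, n_chirps, n_samples = 90, 8, 128
frames = np.zeros((n_angles, n_chirps, n_samples), dtype=complex)
tone = np.exp(2j * np.pi * 20 * np.arange(n_samples) / n_samples)
frames[10, :, :] = tone
image = range_angle_heatmap(frames)
print(image.shape, np.unravel_index(np.argmax(image), image.shape))
```

Clipping to a fixed dynamic range below the peak keeps weak clutter from dominating the image contrast; a real pipeline might instead use a fixed absolute scale so that images from different scans are comparable.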

You Only Look Once (YOLO) is a convolutional neural network (CNN) model for real-time object detection and classification in images. The objects in the range-angle images are labelled before being fed to the YOLO algorithm, which places a bounding box around each object in the range-angle map. These distinct bounding boxes identify the individual targets.
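The article does not specify the annotation tool or label format. As an illustration, assuming the widely used YOLO text-label convention (one line per object: class index, then box centre x, centre y, width, and height, each normalised by the image size), a pixel-space box drawn on a range-angle image could be converted as follows. The class indices in `CLASS_IDS` and the helper `to_yolo_label` are hypothetical.

```python
CLASS_IDS = {"human": 0, "drone": 1, "car": 2}  # hypothetical class mapping

def to_yolo_label(class_name, box, img_w, img_h):
    """Convert a pixel box (x_min, y_min, x_max, y_max) to a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    x_c = (x_min + x_max) / 2.0 / img_w  # normalised box centre
    y_c = (y_min + y_max) / 2.0 / img_h
    w = (x_max - x_min) / img_w          # normalised box size
    h = (y_max - y_min) / img_h
    return f"{CLASS_IDS[class_name]} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"

# Example: a car occupying pixels x 100-200, y 50-150 in a 400 x 200 image
print(to_yolo_label("car", (100, 50, 200, 150), img_w=400, img_h=200))
# prints "2 0.375000 0.500000 0.250000 0.500000"
```

Normalising by image size keeps the labels valid if the heatmaps are later resized to the detector's input resolution.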

The proposed model was used to detect and classify humans, drones, and cars, achieving 87–99% accuracy across the three classes on customised, multi-target range-angle images.

The model predicted object classes reliably when targets were sparse. When targets move close together, however, their bounding boxes in the range-angle map may overlap, degrading the overall resolution. Further research is required to fuse data from additional sensors with the mmWave radar data to improve resolution and prediction accuracy.
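One way to see why closely spaced targets are harder to separate is to measure how much their boxes overlap. Object detectors in the YOLO family typically apply non-maximum suppression (NMS), discarding a lower-scoring box whose intersection over union (IoU) with a higher-scoring box exceeds a threshold. The minimal sketch below, with hypothetical box coordinates and an assumed 0.5 threshold, shows two adjacent targets collapsing into a single detection while a well-separated third target survives. The article does not state that NMS causes the degradation it observed; this only illustrates the overlap effect.

```python
def iou(a, b):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep boxes in score order unless they overlap a kept box too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    kept = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in kept):
            kept.append(i)
    return kept

# Hypothetical detections on a range-angle image: two adjacent humans, one distant car
boxes = [(10, 10, 30, 50), (14, 10, 34, 50), (60, 10, 80, 50)]
scores = [0.9, 0.8, 0.7]
print(f"IoU of adjacent boxes: {iou(boxes[0], boxes[1]):.2f}")  # 0.67
print("kept indices:", non_max_suppression(boxes, scores))      # [0, 2]
```

Raising the threshold keeps both adjacent boxes but also lets genuine duplicate detections through, which is one reason fusing another sensor, as suggested above, may help more than tuning the detector alone.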