Swin-Depth: Using Transformers and Multi-Scale Fusion for Monocular-Based Depth Estimation

Depth estimation has broad application prospects in robot navigation, autonomous devices such as quadrotor drones, and autonomous driving. In automated systems like uncrewed vehicles and robots, it is crucial for perception and navigation. Monocular depth estimation is the computer vision task of recovering depth from a single RGB (red, green, blue) image.

Researchers have worked extensively on monocular depth estimation with convolutional neural networks (CNNs). However, the convolution operation at the core of a CNN is limited in its ability to model long-range dependencies. Another data-driven approach to monocular depth estimation uses transformers in place of CNNs.

Transformers have shown high accuracy in natural language processing and machine translation, and researchers have been studying their usability in computer vision. However, when applied to images, transformers suffer from high computational complexity and an excessive number of parameters.

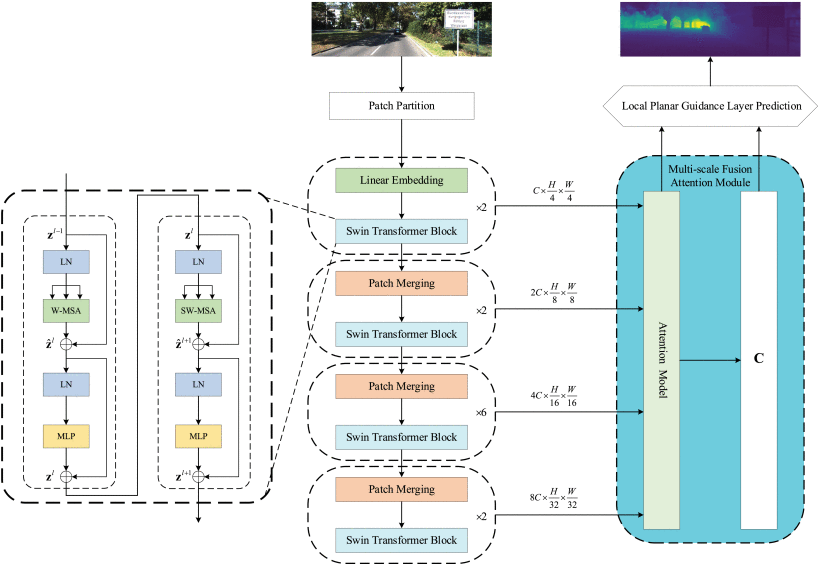

Swin-Depth is a novel monocular depth estimation method proposed to address the difficulty of predicting continuous labels within a limited receptive field. The Swin (Shifted Windows) Transformer learns hierarchical representations with computational complexity that is linear in image size. Swin-Depth uses a backbone network based on the Swin Transformer.
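To make the linear-complexity claim concrete, the original Swin Transformer paper compares global multi-head self-attention (MSA) with window-based self-attention (W-MSA) on a feature map of $h \times w$ patches with $C$ channels and a window of $M \times M$ patches. These expressions come from that paper, not from the Swin-Depth study itself:

$$
\Omega(\text{MSA}) = 4hwC^2 + 2(hw)^2C, \qquad \Omega(\text{W-MSA}) = 4hwC^2 + 2M^2hwC
$$

The first cost grows quadratically with the number of patches $hw$, while the second grows linearly in $hw$ when the window size $M$ is fixed.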

The prototype pairs hierarchical representation learning in the front end with a CNN-based framework in the back end, forming an encoder-decoder architecture. The encoder uses shifted windows to compute representations in a hierarchical network.

For self-attention calculations, non-overlapping local windows handle local modeling, while cross-window connections capture global information. This approach reduces the parameter count and improves computational efficiency in monocular depth estimation.
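The window mechanism can be illustrated with a minimal sketch in plain Python. It assumes a tiny 4 × 4 grid of token indices, a window size of 2, and a cyclic shift of half a window, as in the original Swin Transformer. The function names `window_partition` and `cyclic_shift` are hypothetical, and the attention masking that Swin applies to wrapped-around windows is omitted.

```python
def window_partition(grid, window):
    """Split a 2-D grid (list of rows) into non-overlapping window x window tiles."""
    height, width = len(grid), len(grid[0])
    assert height % window == 0 and width % window == 0
    tiles = []
    for top in range(0, height, window):
        for left in range(0, width, window):
            tiles.append([row[left:left + window] for row in grid[top:top + window]])
    return tiles


def cyclic_shift(grid, shift):
    """Roll the grid up and to the left by `shift` positions, wrapping at the edges."""
    rows = grid[shift:] + grid[:shift]
    return [row[shift:] + row[:shift] for row in rows]


# Hypothetical 4 x 4 grid whose entries are token indices 0..15.
grid = [[r * 4 + c for c in range(4)] for r in range(4)]

regular = window_partition(grid, 2)                   # windows used in one block
shifted = window_partition(cyclic_shift(grid, 1), 2)  # windows used in the next block

print(regular[0])
print(shifted[0])
```

Attention is computed inside each tile only. The first shifted tile contains one token from each of the four regular tiles, which is how alternating the two partitions lets information cross window boundaries.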

A novel lightweight multi-scale fusion attention module strengthens the system's ability to capture global information after hierarchical representation learning. The attention module selectively enhances the prominent parts of the image while suppressing the unnecessary ones.
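The source does not describe the internals of this module, so the following is only a generic illustration of attention-weighted fusion, not the Swin-Depth design. It assumes each scale has already been reduced to a feature vector of the same length and that a relevance score per scale is available. In a real network those scores would come from learned layers. The names `softmax` and `fuse_scales` and all input values are hypothetical.

```python
import math


def softmax(scores):
    """Turn arbitrary scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_scales(features, scores):
    """Weighted sum of same-length feature vectors, one vector per scale."""
    weights = softmax(scores)
    length = len(features[0])
    fused = [sum(w * f[i] for w, f in zip(weights, features)) for i in range(length)]
    return fused, weights


# Hypothetical features from three scales and made-up relevance scores.
features = [[0.2, 0.8], [0.5, 0.1], [0.9, 0.4]]
scores = [2.0, 0.5, 1.0]

fused, weights = fuse_scales(features, scores)
print(weights)
print(fused)
```

Scales with higher scores contribute more to the fused vector and low-scoring scales are damped, which is the "enhance the prominent, suppress the unnecessary" behavior in its simplest form.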

A patch partition module cuts the RGB image into non-overlapping patches. Each patch represents the features of the corresponding part of the original image. A Swin Transformer then processes these patches in four stages. Each stage adjusts the channel dimensions and reduces the resolution, producing a hierarchical representation. The output of each stage, with its features at a different scale, enters the multi-scale fusion attention module for subsequent processing. The result is then fed into the back end for monocular depth estimation.
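The four-stage hierarchy can be sketched as a shape calculation. The defaults below (4 × 4 patches, 96 embedding channels, resolution halved and channels doubled between stages) follow the Swin-T configuration from the original Swin Transformer paper. Whether Swin-Depth uses exactly these values is an assumption, and `swin_stage_shapes` and the 224 × 224 input are hypothetical.

```python
def swin_stage_shapes(height, width, patch=4, embed_dim=96, stages=4):
    """Return (height, width, channels) of the feature map after each stage."""
    # Patch partition: each patch x patch block of pixels becomes one token.
    h, w, c = height // patch, width // patch, embed_dim
    shapes = []
    for stage in range(stages):
        if stage > 0:
            # Patch merging: halve the resolution and double the channels.
            h, w, c = h // 2, w // 2, c * 2
        shapes.append((h, w, c))
    return shapes


for stage, shape in enumerate(swin_stage_shapes(224, 224), start=1):
    print(f"stage {stage}: {shape}")
```

Under these assumptions the four outputs are at 1/4, 1/8, 1/16, and 1/32 of the input resolution, which are the different scales that a fusion module would receive.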

The study tested Swin-Depth on challenging datasets containing outdoor and indoor scenes. On the outdoor dataset KITTI, the method reduces the squared relative error (Sq Rel) to 0.232 and the root mean squared error (RMSE) to 2.625 while improving accuracy. Similarly, on the indoor dataset NYU, the method reduces the absolute relative error (Abs Rel) to 0.100 and improves accuracy. At the same time, it uses fewer parameters. Overall, it performed very well compared with existing state-of-the-art methods.
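For readers unfamiliar with these metrics, here is a minimal sketch of their standard definitions in the depth estimation literature, computed over valid pixels with positive depth. The function name `depth_metrics` and the sample values are hypothetical. The accuracy threshold of 1.25 is the commonly used one and is assumed here rather than taken from the study.

```python
import math


def depth_metrics(pred, gt, threshold=1.25):
    """Compute common depth-error metrics for predicted and ground-truth depths."""
    pairs = list(zip(pred, gt))
    n = len(pairs)
    # Absolute relative error: mean of |pred - gt| / gt.
    abs_rel = sum(abs(p - g) / g for p, g in pairs) / n
    # Squared relative error: mean of (pred - gt)^2 / gt.
    sq_rel = sum((p - g) ** 2 / g for p, g in pairs) / n
    # Root mean squared error.
    rmse = math.sqrt(sum((p - g) ** 2 for p, g in pairs) / n)
    # Threshold accuracy: share of pixels with max(pred/gt, gt/pred) < threshold.
    accuracy = sum(max(p / g, g / p) < threshold for p, g in pairs) / n
    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse, "accuracy": accuracy}


# Made-up depths in meters, for illustration only.
predicted = [2.0, 4.5, 12.0]
ground_truth = [2.0, 5.0, 8.0]

print(depth_metrics(predicted, ground_truth))
```

Lower is better for Abs Rel, Sq Rel, and RMSE, while higher is better for the threshold accuracy.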

An ablation study showed that the multi-scale fusion attention module effectively enhances the network's ability to learn global representations. In addition, the design reduces the number of parameters in the system. The prototype offers a data-driven, more accurate, and more efficient way to solve the depth estimation problem with monocular sensors.