Smart Healthcare: RL-Based Task Offloading Scheme for Edge-Enable Sensor Networks

Smart healthcare systems provide efficient, personalized, and convenient healthcare through real-time monitoring and analysis. They rely on innovative technologies like the Internet of Medical Things (IoMT), cloud computing, edge computing, and artificial intelligence.

A conventional cloud-computing system routinely moves dynamic data between a central processing facility (the cloud) and end-user devices. The ever-growing volume of real-time data creates challenges such as high system latency, energy inefficiency, limited bandwidth, and unreliable connectivity.

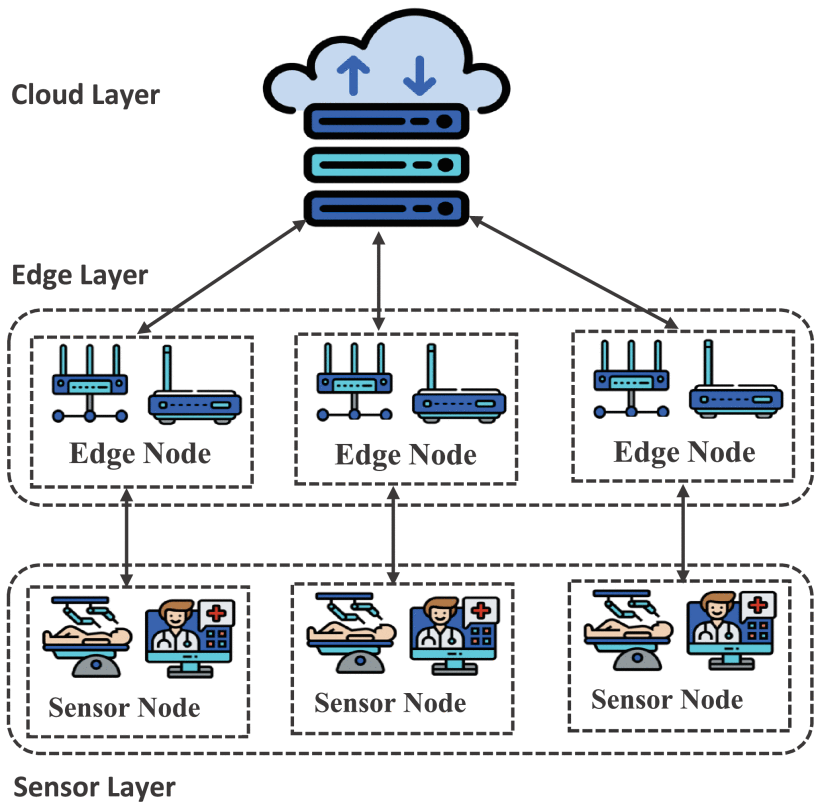

Edge or fog computing provides a decentralized, hierarchical computing infrastructure that bridges the cloud-computing core and end-user applications. The IoMT devices and sensor nodes in the lowest layer are capable sensors but have limited resources. The edge nodes in the middle layer are arranged hierarchically, with processing and storage capabilities increasing from bottom to top. The top layer consists of cloud servers with heterogeneous resources.

When the sensor nodes at the local level become overloaded at peak times, an algorithm offloads their tasks to a set of preselected edge nodes at a higher level.
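The overload trigger described above can be sketched as follows. This is only an illustration, not the paper's algorithm: the `Node` class, its fields, and the rule of picking the preselected edge node with the most spare capacity are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    """Hypothetical node model; capacity and load are in abstract work units."""
    name: str
    capacity: float
    load: float = 0.0

    def spare(self) -> float:
        """Capacity still available on this node."""
        return self.capacity - self.load


def place_task(sensor: Node, edge_candidates: List[Node], demand: float) -> Optional[Node]:
    """Run the task locally if the sensor node has room; otherwise offload it.

    Returns the node that accepted the task, or None if no node could host it.
    """
    if sensor.spare() >= demand:
        sensor.load += demand
        return sensor
    # The sensor node is overloaded: keep only preselected edge nodes with room.
    feasible = [n for n in edge_candidates if n.spare() >= demand]
    if not feasible:
        return None
    target = max(feasible, key=Node.spare)  # assumed selection rule
    target.load += demand
    return target
```

A fixed rule like this one ignores energy and latency. That gap is what a learned offloading policy is meant to close.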

An efficient scheme, Computation Offloading using Reinforcement Learning (CORL), is presented here for jointly minimizing latency and energy consumption in an edge-enabled sensor network environment. A reinforcement learning (RL) algorithm typically alternates between two events: a task is generated, and an action is taken. The algorithm learns through trial and error to improve its performance.

In the CORL scheme, when a task is generated, the algorithm allots it to a processor node based on a predefined strategy. The algorithm records the system state, i.e., the resource capabilities of the nodes (available battery energy, capacity, processor capacity, and bandwidth) and the resource requirement of the task. A reward or penalty is then recorded according to the outcome of the action: completion, partial completion, or failure.
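To make the recorded quantities concrete, here is a minimal sketch of a state record and an outcome-based reward. The field names, the `Outcome` enum, and the numeric reward values are assumptions for illustration; the text does not give the actual encoding or reward magnitudes.

```python
from dataclasses import dataclass
from enum import Enum


class Outcome(Enum):
    """The three action outcomes named in the text."""
    COMPLETED = "completed"
    PARTIAL = "partial"
    FAILED = "failed"


@dataclass(frozen=True)
class SystemState:
    """Snapshot taken when a task is allotted (field names are illustrative)."""
    battery_energy: float  # available battery energy of the node
    capacity: float        # "capacity" as listed in the text, left unspecified there
    cpu_capacity: float    # processor capacity
    bandwidth: float       # bandwidth of the node
    task_demand: float     # resource requirement of the task


# Assumed reward values: positive for success, negative as the penalty.
REWARDS = {Outcome.COMPLETED: 1.0, Outcome.PARTIAL: 0.0, Outcome.FAILED: -1.0}


def reward_for(outcome: Outcome) -> float:
    """Map the outcome of an allotment to a scalar reward or penalty."""
    return REWARDS[outcome]
```

Freezing the dataclass makes each state hashable, so it can serve directly as a lookup key in a value table.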

After analyzing the reward (or penalty), the algorithm revises the allotment strategy. This process, modeled as a Markov Decision Process (MDP), is repeated many times until the allotment strategy no longer improves.
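One common way to realize this reward-driven revision is the standard tabular Q-learning update, shown here as an assumption, since the text does not state the exact rule CORL uses:

$$
Q(s, a) \leftarrow Q(s, a) + \alpha \left[ r + \gamma \max_{a'} Q(s', a') - Q(s, a) \right]
$$

Here $s$ is the system state when the task is generated, $a$ is the chosen processor node, $r$ is the recorded reward or penalty, $s'$ is the resulting state, $\alpha \in (0, 1]$ is the learning rate, and $\gamma \in [0, 1)$ is the discount factor. Each repetition moves the estimate $Q(s, a)$ a fraction $\alpha$ of the way toward the observed return.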

The optimum state of each edge node is stored in a Q-value table at the cloud level. The sensor nodes consult these Q-table values when deciding whether to offload a new task to the most efficient edge node or to the cloud. The Q-value table is updated constantly. Over time, the proposed algorithm can achieve low latency and reduced energy consumption.
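The table lookup and update can be sketched as below. This is an illustrative tabular Q-learning agent with epsilon-greedy selection, not the authors' implementation. The `QTable` class, the action names, and the hyperparameter defaults are all hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, Hashable, List, Tuple

Action = str  # e.g. the name of an edge node, or "cloud"


class QTable:
    """Tabular Q-values keyed by (state, action); unseen entries default to 0."""

    def __init__(self, actions: List[Action], alpha: float = 0.1,
                 gamma: float = 0.9, epsilon: float = 0.1, seed: int = 0):
        self.actions = actions
        self.alpha, self.gamma, self.epsilon = alpha, gamma, epsilon
        self.q: Dict[Tuple[Hashable, Action], float] = defaultdict(float)
        self.rng = random.Random(seed)

    def choose(self, state: Hashable) -> Action:
        """Epsilon-greedy: usually pick the best-known target, sometimes explore."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(self.actions)
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update(self, state: Hashable, action: Action,
               reward: float, next_state: Hashable) -> None:
        """Standard Q-learning step toward reward plus discounted best next value."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        td_target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (td_target - self.q[(state, action)])
```

In use, a sensor node would call `choose` with its current state to pick an offloading target, then call `update` once the outcome and its reward are known.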

An edge-computing environment with a cloud server, 10 edge nodes, and approximately 40 sensor nodes was simulated to evaluate the scheme on performance metrics such as energy consumption, latency, and offloading time. Any task that violated the maximum latency limit was discarded. The evaluation ran until all sensor nodes’ tasks had been executed.

Compared to baseline policies such as Random, Cloud-Edge, and Cloud, the CORL scheme consistently achieved the lowest energy consumption. Total latency and computational cost increased with computation intensity. Overall, the proposed scheme minimized total latency and energy consumption more effectively than the other schemes.