Human Activity Recognition With Smartphone and Wearable Sensors Using Deep Learning Techniques: A Review

The objective of a Human Activity Recognition (HAR) system is to accurately identify and monitor a person's activities in real-life settings by extracting features from data provided by various sensors. Over the last decade, HAR has attracted extensive research interest because of its usefulness in areas such as Ambient Assisted Living (AAL), sports injury detection, well-being management, medical diagnosis and elderly care.

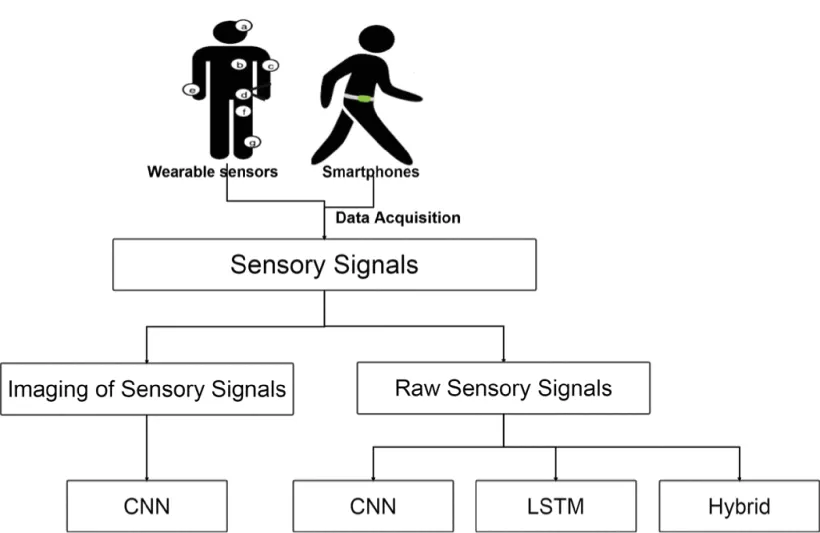

The emergence of smartphones and smart wearables has considerably widened the scope for improving HAR systems and their accuracy. These devices integrate various embedded sensors such as an accelerometer, gyroscope, video camera, microphone, GPS, proximity sensor and light sensor. The sensors can unobtrusively send time-series data, which is analyzed by pattern-mapping, Machine Learning (ML) or Deep Learning (DL) algorithms for activity recognition.

Traditional ML and other “shallow” algorithms need handcrafted features, which limits their growth and compatibility. With the advantages of local dependency, scale invariance and on-hand learning, deep learning algorithms are highly capable and efficient at handling time-series signals for feature extraction and classification.
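To make the idea of handcrafted features concrete, the following is a minimal sketch of the kind of per-window statistics a shallow pipeline might compute before classification. The feature set, the function name `handcrafted_features` and the sample readings are illustrative assumptions, not features prescribed by any particular HAR study.

```python
import math
import statistics


def handcrafted_features(window):
    """Compute a few hand-engineered features for one sensor axis of one window."""
    mean = statistics.fmean(window)
    std = statistics.pstdev(window)  # population standard deviation
    # Root mean square of the raw signal.
    rms = math.sqrt(sum(x * x for x in window) / len(window))
    # Number of times the signal crosses its own mean (a crude frequency proxy).
    centered = [x - mean for x in window]
    crossings = sum(1 for a, b in zip(centered, centered[1:]) if a * b < 0)
    return {"mean": mean, "std": std, "rms": rms, "mean_crossings": crossings}


# Hypothetical accelerometer readings along a single axis.
example_window = [1.0, -1.0, 1.0, -1.0, 2.0, -2.0]
features = handcrafted_features(example_window)
```

Each such feature has to be chosen and tuned by hand for the sensor and activity set at hand, which is the limitation that deep models aim to remove by learning features directly from the raw signal.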

Typically, a deep learning model has a hierarchy of multiple layers that automatically learn non-linear transformations and extract features, each layer using the output of the previous one as input. Such models are trained on an already supplied dataset and then predict labels for new data. Researchers have produced a large number of benchmark datasets for evaluating the performance of HAR systems. However, these datasets are mostly person, time and sensor dependent.

The Convolutional Neural Network (CNN) is a DL model that exploits spatial correlations in data to capture relevant features at every time step. This makes CNNs capable of recognizing and classifying activities from both images and time-series signals. Long Short-Term Memory (LSTM) networks process entire sequences of data through feedback connections to classify time-series data.
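As a rough illustration of how a convolutional layer captures local patterns along the time axis, the sketch below slides a small kernel over a one-dimensional signal, applies a ReLU non-linearity and then max-pools the result. The kernel values and the signal are made-up assumptions; in a trained CNN the kernel weights are learned from data.

```python
def conv1d(signal, kernel):
    """Valid (unpadded) 1D cross-correlation, the core operation of a convolutional layer."""
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Element-wise rectified linear unit."""
    return [max(0.0, v) for v in values]


# Hypothetical signal: a burst of movement in the middle of a quiet period.
signal = [0.0, 0.0, 1.0, 1.0, 1.0, 0.0, 0.0]
# Hypothetical kernel that responds to a rising edge.
edge_kernel = [-1.0, 1.0]

feature_map = relu(conv1d(signal, edge_kernel))
pooled = max(feature_map)  # global max pooling over time
```

The same kernel is applied at every time step, so the layer responds to the rising edge wherever it occurs in the window.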

Over the years, to make them more accurate and better adapted to the HAR problem, many variations of DL models have been explored by varying parameters such as invariant distribution of weights, conditional parameterization, size of kernels and length of sliding window in convolutional layers.
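The sliding-window length mentioned above controls how a continuous sensor stream is cut into fixed-size segments before it reaches the network. A minimal sketch, assuming a flat list of samples and hypothetical parameter names `window_size` and `step`:

```python
def sliding_windows(samples, window_size, step):
    """Split a sample stream into fixed-length, possibly overlapping windows.

    Trailing samples that do not fill a complete window are dropped.
    """
    if window_size <= 0 or step <= 0:
        raise ValueError("window_size and step must be positive")
    return [
        samples[start:start + window_size]
        for start in range(0, len(samples) - window_size + 1, step)
    ]


# Ten hypothetical samples, windows of 4 samples with 50% overlap (step of 2).
stream = list(range(10))
windows = sliding_windows(stream, window_size=4, step=2)
```

A longer window gives the model more context per prediction but delays recognition, while a shorter one reacts faster and may miss slower activities, which is why the window length is treated as a tunable parameter.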

Some models try to improve performance by stacking multiple CNNs or by combining CNNs with LSTMs. These hybrid models perform better than traditional DL models on certain challenges such as limited training data, confusing activities and localization of sensors. Heterogeneous Deep CNN, lightweight CNN, Deep-Bidirectional LSTM, Hierarchical LSTM, EnsemConvNet, DeepConvLSTM and SelfHAR are some of the recent promising models. These models strive to accurately recognize simple activities and to distinguish between confusing activities such as walking vs. climbing upstairs.
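In a CNN-LSTM hybrid, the sequence of features produced by the convolutional stage is consumed step by step by a recurrent cell. The sketch below implements the standard LSTM update for a single unit with scalar input and state, and runs it over a stand-in feature sequence. It is a toy illustration under assumed, hand-picked weights (the dictionary keys are hypothetical names) and does not reproduce the architecture of any of the models named above.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def lstm_step(x, h_prev, c_prev, w):
    """One time step of a single-unit LSTM cell with scalar input and state."""
    i = sigmoid(w["wi"] * x + w["ui"] * h_prev + w["bi"])    # input gate
    f = sigmoid(w["wf"] * x + w["uf"] * h_prev + w["bf"])    # forget gate
    o = sigmoid(w["wo"] * x + w["uo"] * h_prev + w["bo"])    # output gate
    g = math.tanh(w["wg"] * x + w["ug"] * h_prev + w["bg"])  # candidate state
    c = f * c_prev + i * g   # new cell state (the cell's memory)
    h = o * math.tanh(c)     # new hidden state, fed back at the next step
    return h, c


def run_lstm(sequence, w):
    """Feed a feature sequence through the cell and return the final hidden state."""
    h, c = 0.0, 0.0
    for x in sequence:
        h, c = lstm_step(x, h, c, w)
    return h


# Hypothetical weights; a trained model would learn these from data.
weight_names = ["wi", "ui", "bi", "wf", "uf", "bf", "wo", "uo", "bo", "wg", "ug", "bg"]
weights = {name: 0.0 for name in weight_names}
weights["wg"] = 1.0

# Stand-in for the per-time-step output of a convolutional stage.
conv_features = [0.0, 1.0, 0.0, 0.0]
final_hidden = run_lstm(conv_features, weights)
```

The hidden state `h` carried from one step to the next is the feedback connection that lets the recurrent stage summarize the whole sequence, and in a classifier it would typically be passed to a final output layer.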

Deep learning models are highly efficient and accurate at recognizing simple human activities such as walking, standing and sitting. Smartphone-based HAR systems use these models to track and make predictions about a person’s lifestyle and health.

However, in real life, a person performs many more complex activities, such as searching under a bed, tying a shoelace or sweeping, to name a few. Most of the proposed state-of-the-art techniques fail to categorize complex activities and postural transitions, and they produce false alarms. Much work is still needed before HAR systems can be reliably integrated into Ambient Assisted Living and elderly care systems.