DN-SLAM: A Visual SLAM With ORB Features and NeRF Mapping in Dynamic Environments

Simultaneous localization and mapping (SLAM) is a technique by which robots and other devices estimate their own position while building a map of an unfamiliar environment, which makes it crucial for adapting to new surroundings. SLAM systems have a wide range of applications in computer vision, robotics, augmented reality (AR), and virtual reality (VR).

Traditional visual SLAM often prioritizes localization accuracy and assumes a static environment. However, real-world scenes frequently contain dynamic elements such as pedestrians, which hinder the system's ability to track the camera position and construct accurate maps, especially in highly dynamic settings. In addition, traditional visual SLAM systems often produce sparse maps, which limits how much detail they capture about the environment.

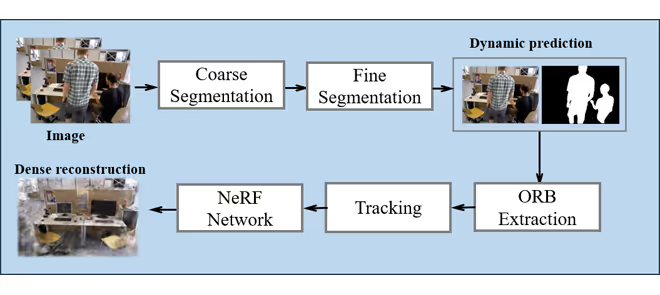

The proposed system, DN-SLAM, is built on ORB-SLAM3 and combines semantic segmentation with the Segment Anything Model (SAM). It is designed specifically for dynamic environments. ORB (Oriented FAST and Rotated BRIEF) features help a SLAM system detect landmarks and track motion across images by identifying small, distinctive image patches.
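To make the role of ORB features more concrete, the following minimal sketch shows how binary descriptors of the kind ORB produces are typically matched between two frames using the Hamming distance. The function names, the 8-bit toy descriptors, and the `max_distance` threshold are assumptions made for illustration; they are not taken from DN-SLAM or ORB-SLAM3.

```python
def hamming(a: int, b: int) -> int:
    """Count the bits that differ between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_descriptors(prev, curr, max_distance=2):
    """Match each descriptor in `prev` to its nearest neighbour in `curr`.

    Returns a list of (prev_index, curr_index, distance) tuples for matches
    whose Hamming distance is at most `max_distance` (a hypothetical threshold).
    """
    if not curr:
        return []
    matches = []
    for i, d_prev in enumerate(prev):
        best_j, best_dist = min(
            ((j, hamming(d_prev, d_curr)) for j, d_curr in enumerate(curr)),
            key=lambda pair: pair[1],
        )
        if best_dist <= max_distance:
            matches.append((i, best_j, best_dist))
    return matches


# Toy 8-bit descriptors; real ORB descriptors are 256 bits long.
prev_frame = [0b10110010, 0b01001101, 0b11110000]
curr_frame = [0b01001111, 0b10110011, 0b00001111]
matches = match_descriptors(prev_frame, curr_frame)
```

Here the first two descriptors find close counterparts in the second frame, while the third has no neighbour within the threshold and is left unmatched.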

In the tracking phase, semantic information is used to identify potentially moving targets and produce a preliminary segmentation. To refine the dynamic targets more accurately, the system integrates optical flow with the Segment Anything Model. Feature points associated with dynamic objects, which could degrade matching accuracy, are then removed, while the most valuable static feature points are retained for tracking. Reducing the influence of dynamic targets in this way significantly improves localization accuracy in highly dynamic environments. The information considered is confined to within one pixel of each feature point, which reduces computational complexity and improves the accuracy of camera position estimation.
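The filtering idea described above can be sketched roughly as follows. This is a simplified illustration, not the authors' implementation: it assumes a precomputed boolean mask of potentially dynamic regions (standing in for the semantic and SAM segmentation), a per-keypoint optical-flow residual, and a hypothetical `flow_threshold`. A keypoint is discarded only when it lies in a candidate region and its flow indicates motion.

```python
def filter_static_keypoints(keypoints, candidate_mask, flow_residuals,
                            flow_threshold=1.5):
    """Keep keypoints that are not confirmed as belonging to moving objects.

    keypoints      : list of (x, y) integer pixel coordinates
    candidate_mask : 2D list of bools, True where an object class that might
                     move (e.g. a person) was segmented
    flow_residuals : per-keypoint optical-flow magnitude in pixels, assumed to
                     be already compensated for camera motion
    flow_threshold : hypothetical cut-off above which a point counts as moving
    """
    static = []
    for (x, y), residual in zip(keypoints, flow_residuals):
        in_candidate_region = candidate_mask[y][x]
        is_moving = residual > flow_threshold
        if in_candidate_region and is_moving:
            continue  # confirmed dynamic: drop the point
        static.append((x, y))
    return static


# 4x4 toy mask: the top-right 2x2 block is a potentially dynamic object.
mask = [
    [False, False, True,  True],
    [False, False, True,  True],
    [False, False, False, False],
    [False, False, False, False],
]
keypoints = [(0, 0), (2, 1), (3, 0)]
residuals = [0.2, 3.0, 0.4]
static_points = filter_static_keypoints(keypoints, mask, residuals)
```

In this toy case the point at `(2, 1)` is dropped, while `(3, 0)` is kept even though it lies in the candidate region, because its flow residual suggests the object is not actually moving there.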

At the same time, an implicit neural representation, NeRF (Neural Radiance Field), is used to generate dense maps. NeRF can produce high-quality renderings after training on only a limited number of input views. Unlike traditional reconstruction representations such as point clouds, meshes, and voxels, NeRF represents the scene as a continuous function, which improves the portrayal of complex scenes and allows rendering from any viewpoint, resulting in realistic view synthesis.
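As a rough illustration of how a radiance field turns into an image, the sketch below composites density and color samples along a single camera ray using the standard NeRF volume rendering quadrature. In an actual NeRF the densities and colors would come from a trained network; here they are hard-coded toy values, and the function name `render_ray` is an assumption for this example. The exact NeRF variant used by DN-SLAM is not specified in this summary.

```python
import math


def render_ray(densities, colors, deltas):
    """Composite samples along one ray, front to back.

    densities : volume density (sigma) at each sample
    colors    : (r, g, b) color at each sample
    deltas    : distance between consecutive samples
    Returns the rendered pixel color and the remaining transmittance.
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha
        for k in range(3):
            pixel[k] += weight * color[k]
        transmittance *= 1.0 - alpha
    return pixel, transmittance


# Empty space, then a dense red surface that hides the green sample behind it.
pixel, remaining = render_ray(
    densities=[0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)],
    deltas=[0.1, 1.0, 1.0],
)
```

The rendered pixel is essentially pure red: the empty first sample contributes nothing, and the dense second sample absorbs almost all of the light before it reaches the third.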

DN-SLAM combines NeRF and SLAM by running NeRF in a dedicated mapping thread: while the tracking thread is running, the mapping thread performs NeRF rendering. After dynamic objects are removed from the scene, the background is repaired, and dense maps are generated from the estimated camera positions and other information, reflecting the fine-grained detail of the scene.
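The division of work between the two threads can be pictured with a simple producer-consumer sketch. Everything here is a placeholder: the function names, the fake poses, and the use of a queue to pass keyframes are assumptions chosen for illustration, and the mapping step merely records its input where a real system would run NeRF training and rendering.

```python
import queue
import threading


def tracking_thread(frame_ids, keyframe_queue):
    """Estimate a pose per frame and hand it to the mapper (placeholder poses)."""
    for frame_id in frame_ids:
        pose = (float(frame_id), 0.0, 0.0)  # stand-in for an estimated position
        keyframe_queue.put((frame_id, pose))
    keyframe_queue.put(None)  # sentinel: no more frames


def mapping_thread(keyframe_queue, mapped):
    """Consume keyframes as they arrive; stands in for NeRF mapping."""
    while True:
        item = keyframe_queue.get()
        if item is None:
            break
        mapped.append(item)  # a real mapper would update the dense map here


keyframes = queue.Queue()
mapped = []
tracker = threading.Thread(target=tracking_thread, args=(range(3), keyframes))
mapper = threading.Thread(target=mapping_thread, args=(keyframes, mapped))
tracker.start()
mapper.start()
tracker.join()
mapper.join()
```

Because the queue decouples the two threads, tracking does not have to wait for the slower mapping step to finish before processing the next frame.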

In experimental evaluations against other dynamic SLAM algorithms, DN-SLAM performed better on most sequences, showing improved reliability under highly dynamic conditions and a localization error of only 1 to 3 cm. Notably, its accuracy in dynamic conditions closely matches that observed in static environments, indicating its robustness.

Future research should focus on improving the real-time performance of DN-SLAM when SAM is used for fine segmentation in dynamic environments.