Deep Transfer Learning With Self-Attention for Industry Sensor Fusion Tasks

Industry 4.0 aims to improve manufacturing processes by integrating deep learning models and the Internet of Things. Through real-time analysis, automation, predictive maintenance, and self-optimizing processes, smart technologies can substantially change how manufacturing operates.

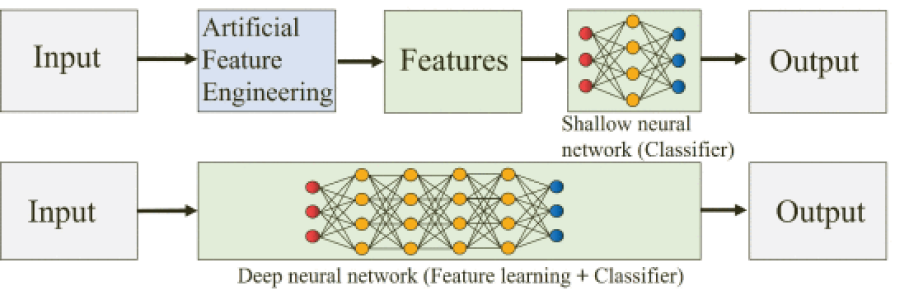

Data in an industrial environment is highly complex: it spans different modalities and measures physical quantities at significantly different sampling rates. Sensor fusion methods that process such complex, unstructured data typically need deeper neural networks with better feature extraction capabilities. Deeper networks in turn require large amounts of training data, which increases overall cost and time. Hand-crafted feature extraction methods, such as time-domain statistical features and frequency-domain features, can reduce training data requirements but require extensive experimentation and a solid understanding of the industrial process.

The proposed transfer learning methodology transfers a deep model, the Transformer, from the data-rich domain of natural language processing (NLP) to industrial applications. It combines the strong learning ability of deep neural networks with the ease of training of shallow neural networks to improve sensor fusion tasks for industrial applications.

The model comprises several stages: data normalization, reorganization, embedding, Generative Pre-trained Transformer 2 (GPT-2), and a classifier. In NLP tasks, a deep learning model must recognize the meaning of individual words and also infer information from a whole sentence or paragraph. In the proposed model, word embeddings are replaced by sensor data as input, with different rows representing data from different sensors. To keep the embedding vector length constant, a sensor with a high sampling rate is spread over multiple embedding vectors, with some sensor values discarded or padding added where needed. The embedding layer is a linear layer that learns the mapping automatically.
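The normalization and reorganization steps can be sketched in plain Python. This is only an illustration under stated assumptions: z-score normalization, a token length of 4, zero padding (rather than discarding values), and the sensor names are all hypothetical choices, not details taken from the study.

```python
from statistics import mean, pstdev


def normalize(signal):
    """Z-score one sensor channel (assumed normalization scheme)."""
    mu, sigma = mean(signal), pstdev(signal)
    if sigma == 0:
        return [0.0 for _ in signal]
    return [(x - mu) / sigma for x in signal]


def to_tokens(signal, token_len, pad_value=0.0):
    """Split one channel into rows of length token_len, padding the last row."""
    rows = []
    for start in range(0, len(signal), token_len):
        row = list(signal[start:start + token_len])
        row += [pad_value] * (token_len - len(row))
        rows.append(row)
    return rows


def reorganize(cycle, token_len):
    """Stack the token rows of every sensor into one (N, token_len) sequence."""
    sequence = []
    for name in sorted(cycle):
        sequence.extend(to_tokens(normalize(cycle[name]), token_len))
    return sequence


# Hypothetical measurement cycle: a fast sensor (10 samples) and a slow one (4).
cycle = {
    "pressure": [float(i % 5) for i in range(10)],
    "temperature": [20.0, 21.0, 22.0, 23.0],
}
sequence = reorganize(cycle, token_len=4)
print(len(sequence), "rows of length", len(sequence[0]))
```

The fast sensor occupies several rows while the slow one fits in a single row, so every row has the same length and can be passed through one shared linear embedding layer.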

In this model, GPT-2, OpenAI's pre-trained decoder-only Transformer, serves as the feature extraction engine. The GPT-2 family scales up to 1.5 billion parameters and was trained on a 40 GB non-task-specific text dataset. The feed-forward and multi-head attention layers retain the features learned from NLP. The method freezes 99.9% of the GPT-2 parameters learned from the natural language dataset and fine-tunes only the layer normalization parameters, the linear input layer, and the output layer, using a learning rate of 0.001 to balance training speed and stability.
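A rough parameter budget shows why such a freezing rule leaves only a tiny trainable share. The sketch below assumes the 768-wide GPT-2 configuration (12 blocks, vocabulary of 50,257, 1,024 positions), which matches the (N, 768) dimension quoted in this summary; the parameter names follow the Hugging Face GPT-2 naming, while `embed_in`, `classifier`, the token length of 60, and the 4 classes are hypothetical.

```python
from math import prod


def gpt2_shapes(n_layer=12, d=768, vocab=50257, n_pos=1024):
    """Parameter shapes of a GPT-2-style stack (assumed 768-wide configuration)."""
    shapes = {"wte.weight": (vocab, d), "wpe.weight": (n_pos, d)}
    for i in range(n_layer):
        p = f"h.{i}."
        shapes.update({
            p + "ln_1.weight": (d,), p + "ln_1.bias": (d,),
            p + "attn.c_attn.weight": (d, 3 * d), p + "attn.c_attn.bias": (3 * d,),
            p + "attn.c_proj.weight": (d, d), p + "attn.c_proj.bias": (d,),
            p + "ln_2.weight": (d,), p + "ln_2.bias": (d,),
            p + "mlp.c_fc.weight": (d, 4 * d), p + "mlp.c_fc.bias": (4 * d,),
            p + "mlp.c_proj.weight": (4 * d, d), p + "mlp.c_proj.bias": (d,),
        })
    shapes.update({"ln_f.weight": (d,), "ln_f.bias": (d,)})
    return shapes


def is_trainable(name):
    """Rule from the text: tune layer norms plus the new input and output layers."""
    return ".ln_" in name or name.startswith(("ln_f", "embed_in", "classifier"))


token_len, n_classes, d_model = 60, 4, 768  # hypothetical task sizes
shapes = gpt2_shapes(d=d_model)
shapes.update({
    "embed_in.weight": (token_len, d_model), "embed_in.bias": (d_model,),
    "classifier.weight": (d_model, n_classes), "classifier.bias": (n_classes,),
})

total = sum(prod(s) for s in shapes.values())
trainable = sum(prod(s) for n, s in shapes.items() if is_trainable(n))
frozen_fraction = 1 - trainable / total
print(f"trainable: {trainable:,} of {total:,} ({frozen_fraction:.3%} frozen)")
```

In a framework such as PyTorch, the same name-based rule would typically be applied by iterating over the model's named parameters and disabling gradients for every name the rule rejects.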

The embedding layer and the classifier are sized to match GPT-2's dimensions (N, 768), that is, a sequence of N vectors of width 768. The input size of the embedding layer depends on the dimensions of the dataset, whereas the output size of the classifier depends on the number of task categories. The classification layer is a single-layer feed-forward neural network that takes the last position of the output sequence as its input.
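The classification head described above can be illustrated with a minimal sketch: one linear layer applied to the last row of an (N, 768) output sequence. The random hidden states, the three classes, and the helper names `linear` and `classify` are placeholders for illustration only.

```python
import random


def linear(x, weight, bias):
    """y = W x + b for one vector x; weight holds one row per output unit."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weight, bias)]


def classify(hidden_states, weight, bias):
    """Apply a single linear layer to the last position and pick the top class."""
    logits = linear(hidden_states[-1], weight, bias)
    label = max(range(len(logits)), key=logits.__getitem__)
    return label, logits


random.seed(0)
d_model, n_classes, n_tokens = 768, 3, 5  # n_classes and n_tokens are hypothetical

# Stand-in for the (N, 768) output sequence of the frozen transformer.
hidden_states = [[random.gauss(0, 1) for _ in range(d_model)] for _ in range(n_tokens)]
weight = [[random.gauss(0, 0.02) for _ in range(d_model)] for _ in range(n_classes)]
bias = [0.0] * n_classes

label, logits = classify(hidden_states, weight, bias)
print("predicted class:", label)
```

Using the last position is a natural choice for a decoder with causal attention, because it is the only position that can attend to every earlier token in the sequence.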

The study evaluated the proposed framework on three datasets, covering the working condition of a hydraulic system, the condition of electric motor bearings, and the working condition of gearbox bearings and gears. The method achieved high accuracy without feature engineering, even in a large input space. The model showed the potential to reduce the training data requirements of deep learning while keeping prediction accuracy high, saving time and cost in industrial settings. Additionally, the model's self-attention mechanism can be inspected to reveal the decision basis and weight information, which improves the interpretability of the deep learning model. Both the predictions and the rationale behind them are valuable for industrial applications.
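To make the interpretability point concrete, the following sketch computes causal scaled dot-product attention weights and reads off which input token the last position attends to most. The two-dimensional queries and keys and the token labels are invented for illustration; in practice the weights would be read from the trained model's attention heads.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def causal_attention_weights(queries, keys):
    """Scaled dot-product attention weights where position i sees only positions <= i."""
    d_k = len(keys[0])
    weights = []
    for i, q in enumerate(queries):
        scores = [
            sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k)
            for k in keys[: i + 1]
        ]
        # Masked (future) positions receive zero weight.
        weights.append(softmax(scores) + [0.0] * (len(keys) - i - 1))
    return weights


# Hypothetical sensor tokens with made-up query and key vectors.
tokens = ["pressure_0", "pressure_1", "flow_0", "temperature_0"]
keys = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0], [0.5, 0.5]]
queries = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [3.0, 0.0]]

weights = causal_attention_weights(queries, keys)
last = weights[-1]
top = tokens[max(range(len(last)), key=last.__getitem__)]
print("last position attends most to:", top)
```

Each row of `weights` sums to one, so the entries of the last row can be read as the relative contribution of each sensor token to the representation that feeds the classifier.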

The model demonstrated satisfactory classification performance but lacked the capacity for regression tasks. Future research could explore how to balance model performance against resource consumption.