CAMs-SLAM: Cloud-Based Multisubmap VSLAM for Multisource Asynchronous Sensing of Biped Climbing Robots

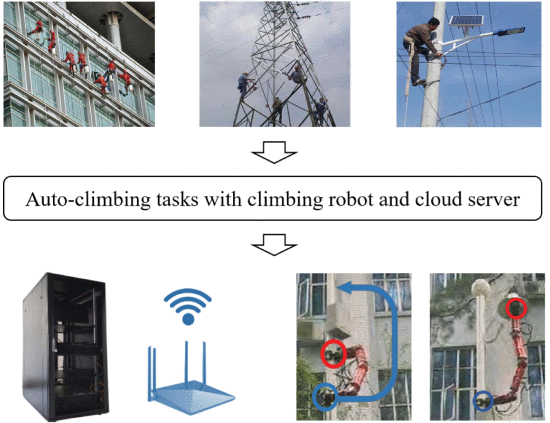

A double-claw climbing robot capable of autonomous navigation at climbing sites is a strong candidate to replace or assist humans in high-altitude, high-risk operations. Various types of climbing robot systems have been proposed over recent decades. Among them, biomimetic Biped Climbing Robots (BiCRs) are widely studied because they can overcome obstacles and perform transitions efficiently.

BiCRs typically grip alternately with their two claws and switch between multiple sensors as they do so, which makes their visual sensing multi-source and asynchronous. However, mapping and localization, as prerequisites for robot climbing operations, require a globally consistent representation rather than a local one tied to each sensor's coordinate frame or to a transient image sequence.

Learning-based VSLAM achieves better global consistency and robustness than traditional model-based methods, but at a high computational cost. A cloud-based design is therefore one way to combine the robustness of learning-based VSLAM with the real-time performance of model-based VSLAM. By introducing cloud-based VSLAM to BiCR systems, the costly reconstruction of challenging images can be moved to a cloud server, which saves onboard computational resources and improves autonomy.

Building on multi-submap VSLAM, the researchers propose a novel cloud-based multi-submap VSLAM system for the asynchronous sensing of BiCRs. This cloud-based asynchronous multi-submap VSLAM (CAMs-SLAM) system not only achieves the final goal of building a globally consistent map for planning autonomous climbing but also opens up possibilities for future climbing robot systems. The proposed system supports the multi-source asynchronous sensing of BiCRs in challenging situations and demonstrates a biped climbing robot system with robust visual mapping and localization ability.

Driven by control commands, the climbing robot climbs autonomously and uses the sensor at its swinging end for perception. When a motion command calls for the two ends to alternate, the multi-submap VSLAM stops the incremental construction of the current submap at the moment of alternate gripping and creates a new submap.

If alternate gripping is not required, visual tracking and mapping continue in the current submap, and the repeatedly observed area near the gripping point is used to establish data association between the perceptions of the two claws.
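The submap switching rule described above can be illustrated with a minimal sketch. This is not the authors' implementation: the class names `Submap` and `SubmapManager`, the sensor labels `claw_a` and `claw_b`, and the choice to assign the frame that arrives with the alternation command to the new submap are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Submap:
    """Hypothetical container for one incrementally built submap."""
    submap_id: int
    sensor: str                                   # camera (claw) that built it
    keyframes: list = field(default_factory=list)


class SubmapManager:
    """Illustrative bookkeeping only; no actual tracking or mapping is done."""

    def __init__(self, first_sensor: str = "claw_a"):
        self.submaps = [Submap(0, first_sensor)]

    @property
    def current(self) -> Submap:
        return self.submaps[-1]

    def on_frame(self, frame_id: int, alternate_grip: bool) -> Submap:
        if alternate_grip:
            # Alternate gripping: close the current submap and start a new
            # one that is fed by the sensor on the other claw.
            other = "claw_b" if self.current.sensor == "claw_a" else "claw_a"
            self.submaps.append(Submap(len(self.submaps), other))
        # Otherwise tracking and mapping continue in the current submap.
        self.current.keyframes.append(frame_id)
        return self.current


manager = SubmapManager()
for frame_id, grip in [(0, False), (1, False), (2, True), (3, False)]:
    manager.on_frame(frame_id, grip)
```

In this sketch, frames 0 and 1 end up in the first submap and frames 2 and 3 in a second submap attributed to the other claw. Aligning the two submaps through the overlapping area near the gripping point is left out.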

During the incremental construction of the current submap, visual tracking may fail intermittently because of the complexity and variability of the climbing work site. When incremental tracking and mapping fail, a new submap is created to keep the system running. At the same time, the untracked images are uploaded to the cloud, where an additional submap is built from them. The resulting cloud map is then downloaded to the robot to complement the alignment of submaps. In parallel, the climbing robot continues real-time exploration and incremental modeling according to the motion control.
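The failure-handling flow in this paragraph can be sketched as follows. It is a simplified illustration under stated assumptions rather than the published system: `EdgeCloudMapper` and `fake_cloud_reconstruct` are hypothetical names, the cloud is replaced by a local stand-in function, and frames are represented by integer ids.

```python
from collections import deque


def fake_cloud_reconstruct(frame_ids):
    """Stand-in for the cloud-side reconstruction (assumed, not a real API)."""
    return {"source": "cloud", "frames": list(frame_ids)}


class EdgeCloudMapper:
    """Illustrative bookkeeping for tracking failures and cloud submaps."""

    def __init__(self):
        self.submaps = [[]]            # onboard submaps as lists of frame ids
        self.upload_queue = deque()    # untracked frames waiting for upload
        self.cloud_submaps = []        # submaps received back from the cloud

    def on_frame(self, frame_id, tracked):
        if tracked:
            # Normal case: extend the current onboard submap.
            self.submaps[-1].append(frame_id)
            return
        # Tracking failed: open a new submap so the robot keeps mapping
        # (only if the current one already holds frames), and queue the
        # untracked frame for the cloud.
        if self.submaps[-1]:
            self.submaps.append([])
        self.upload_queue.append(frame_id)

    def sync_with_cloud(self):
        # Upload queued frames and keep the returned cloud submap, which
        # would later help align the onboard submaps.
        if not self.upload_queue:
            return None
        batch = list(self.upload_queue)
        self.upload_queue.clear()
        cloud_submap = fake_cloud_reconstruct(batch)
        self.cloud_submaps.append(cloud_submap)
        return cloud_submap


mapper = EdgeCloudMapper()
for frame_id, tracked in [(0, True), (1, True), (2, False), (3, False), (4, True)]:
    mapper.on_frame(frame_id, tracked)
mapper.sync_with_cloud()
```

Here the two untracked frames are routed to the stand-in cloud function while onboard mapping resumes in a fresh submap. In a real system the upload would run asynchronously so that exploration is not blocked, which this synchronous sketch does not model.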

With the support of the multi-submap environment representation and edge-cloud collaboration, the system achieves globally consistent modeling of the climbing environment that accommodates the multi-source asynchronous perception of the BiCR and demonstrates advanced performance.