BabyPose: Real-Time Decoding of Baby’s Non-Verbal Communication Using 2D Video-Based Pose Estimation

Babies need delicate and specialized care in their early years as infants and toddlers. Because babies cannot communicate verbally, it is essential for new parents to understand their non-verbal communication, such as gestures and poses.

Human Pose Estimation (HPE) methods have proven useful in a range of fields, including video-based surveillance, sports analysis, and medical aid. According to its authors, however, this study is the first to apply HPE to estimating baby poses.

Babies express themselves through facial expressions, through movements of the hands, legs, eyes, and eyebrows, and through combinations of these movements. Each baby develops its own set of body language to communicate its feelings and needs. This body language can be estimated and decoded from video input using 2D pose estimation.
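To make "decoding" a little more concrete: once a pose estimator provides 2D keypoints, simple geometric signals can be computed from them. The minimal sketch below computes the angle at a joint (for example, the knee, from hip, knee, and ankle keypoints) and the spread of that angle over several frames. Tracking such an angle over time is one plausible way to characterize a movement like kicking, but the study does not say it uses joint angles; the function names and coordinates here are hypothetical.

```python
import math


def joint_angle(a, b, c):
    """Return the angle ABC in degrees, with b as the joint vertex.

    a, b, c are (x, y) pixel coordinates of three keypoints,
    e.g. hip, knee, ankle for the knee angle.
    """
    v1 = (a[0] - b[0], a[1] - b[1])
    v2 = (c[0] - b[0], c[1] - b[1])
    dot = v1[0] * v2[0] + v1[1] * v2[1]
    norm = math.hypot(*v1) * math.hypot(*v2)
    if norm == 0:
        raise ValueError("keypoints must be distinct from the joint")
    # Clamp to [-1, 1] to guard against floating-point drift before acos.
    cos_theta = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos_theta))


def range_of_motion(angles):
    """Spread between the largest and smallest angle over a window of frames."""
    return max(angles) - min(angles)


# Hypothetical hip, knee, and ankle positions from single frames.
print(round(joint_angle((100, 200), (100, 260), (160, 260)), 1))  # 90.0 (bent knee)
print(round(joint_angle((100, 200), (100, 260), (100, 320)), 1))  # 180.0 (straight leg)
print(range_of_motion([90.0, 150.0, 100.0]))                       # 60.0
```

A large, repeated swing in the knee angle across a short window would be one hypothetical cue for kicking; a trained model, as used in the study, learns such cues rather than relying on hand-set rules.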

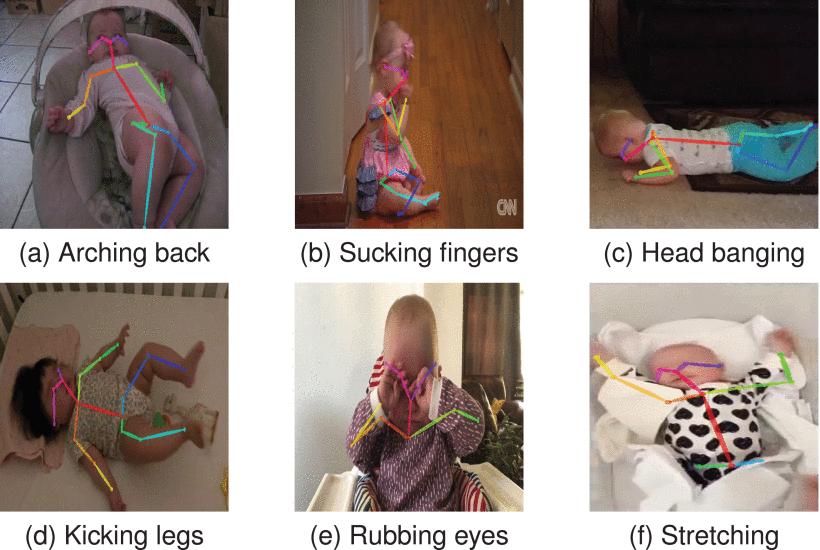

The study focuses mainly on movements that indicate tiredness, hunger, and unhappiness in babies. The researchers built the BabyPose dataset from 156 video clips covering six main actions: arching back, sucking fingers, kicking legs, head banging, stretching, and rubbing eyes.

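Real-time tracking and monitoring of the babies in these videos is handled by DeepSORT, a popular object-tracking algorithm. DeepSORT follows the tracking-by-detection paradigm: a detector locates objects in each video frame, and the tracker associates those detections across frames so that each object keeps a consistent identity.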
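The core idea of tracking-by-detection can be shown with a deliberately simplified tracker. This is not DeepSORT: DeepSORT adds Kalman-filter motion prediction, appearance features from a CNN, and Hungarian assignment, whereas the sketch below matches boxes greedily by intersection-over-union (IoU) only. The class name `SimpleTracker` and the 0.3 threshold are hypothetical choices for illustration.

```python
from itertools import count


def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


class SimpleTracker:
    """Assigns persistent IDs to per-frame detections by IoU overlap."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}      # track id -> box matched in the previous frame
        self._ids = count(1)  # source of fresh track ids

    def update(self, detections):
        """Match this frame's detections to existing tracks; return {id: box}."""
        # Score every (track, detection) pair, highest overlap first.
        pairs = sorted(
            ((iou(box, det), tid, j)
             for tid, box in self.tracks.items()
             for j, det in enumerate(detections)),
            reverse=True,
        )
        matched_tracks, matched_dets, result = set(), set(), {}
        for score, tid, j in pairs:
            if score < self.iou_threshold:
                break  # remaining pairs overlap too little to be the same object
            if tid in matched_tracks or j in matched_dets:
                continue
            matched_tracks.add(tid)
            matched_dets.add(j)
            result[tid] = detections[j]
        # Detections with no matching track start new tracks.
        for j, det in enumerate(detections):
            if j not in matched_dets:
                result[next(self._ids)] = det
        # Unmatched tracks are dropped immediately (no "lost track" buffer here).
        self.tracks = result
        return result


tracker = SimpleTracker()
print(tracker.update([(10, 10, 50, 50), (100, 100, 140, 140)]))
# {1: (10, 10, 50, 50), 2: (100, 100, 140, 140)}
second = tracker.update([(102, 101, 142, 141), (12, 11, 52, 51)])
print(second[1], second[2])
# (12, 11, 52, 51) (102, 101, 142, 141)
```

Even though the detector returns the boxes in a different order in the second frame, each box keeps its ID because it overlaps most with its own previous position.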

To recognize the baby's activities, the system relies on artificial neural networks. CNNs (Convolutional Neural Networks) are well suited to spatial data such as individual images, so the researchers paired them with LSTM (Long Short-Term Memory) models, which capture the temporal, sequential structure of video.
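The temporal half of this pairing can be illustrated with a single LSTM cell written in plain Python. The sketch assumes each frame has already been reduced to a feature vector (for example, the x, y coordinates of 25 keypoints, or a CNN embedding); the CNN itself is omitted. The weights are random and untrained, and the class and function names are hypothetical, so the output only shows how state is carried from frame to frame, not a real prediction.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LSTMCell:
    """A single LSTM cell with untrained, randomly initialized weights."""

    GATES = "ifog"  # input, forget, output gates and candidate values g

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_size = hidden_size
        self.W = {k: mat(hidden_size, input_size) for k in self.GATES}   # input weights
        self.U = {k: mat(hidden_size, hidden_size) for k in self.GATES}  # recurrent weights
        self.b = {k: [0.0] * hidden_size for k in self.GATES}            # biases

    def step(self, x, h, c):
        """Consume one frame's feature vector x; return the new (h, c)."""
        def pre(k):
            return [wx + uh + b for wx, uh, b in
                    zip(matvec(self.W[k], x), matvec(self.U[k], h), self.b[k])]

        i = [sigmoid(z) for z in pre("i")]    # how much new information to write
        f = [sigmoid(z) for z in pre("f")]    # how much of the old cell state to keep
        o = [sigmoid(z) for z in pre("o")]    # how much of the cell state to expose
        g = [math.tanh(z) for z in pre("g")]  # candidate values to write
        c = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h = [ov * math.tanh(cv) for ov, cv in zip(o, c)]
        return h, c


def encode_sequence(cell, frames):
    """Run the cell over per-frame vectors; return the final hidden state."""
    h = [0.0] * cell.hidden_size
    c = [0.0] * cell.hidden_size
    for x in frames:
        h, c = cell.step(x, h, c)
    return h


# Hypothetical input: 10 frames, each with 50 features (x, y of 25 keypoints).
frames = [[math.sin(0.3 * t + k) for k in range(50)] for t in range(10)]
cell = LSTMCell(input_size=50, hidden_size=16)
summary = encode_sequence(cell, frames)
print(len(summary))  # 16
```

In a trained classifier, the final hidden state would typically feed a small output layer that scores each of the six action classes; that layer and the training loop are left out here.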

The input video of the babies’ behavior is processed with three multi-person human pose estimators: OpenPose, AlphaPose, and KAPAO.

OpenPose works bottom-up: it first detects the individual parts of each baby’s body as keypoints in a single frame and then assembles them into full-body postures. It runs in real time and produces 25 body keypoints per person in each video frame.
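OpenPose can write one JSON file per frame (its `--write_json` option), in which each detected person carries a flat `pose_keypoints_2d` list of x, y, confidence triples. The sketch below turns one such frame into named keypoints, assuming the BODY_25 model's keypoint order; the study does not describe how it consumed OpenPose's output, and `parse_openpose_frame` and its confidence cutoff are hypothetical.

```python
import json

# Keypoint order of OpenPose's BODY_25 model.
BODY_25 = [
    "Nose", "Neck", "RShoulder", "RElbow", "RWrist", "LShoulder", "LElbow",
    "LWrist", "MidHip", "RHip", "RKnee", "RAnkle", "LHip", "LKnee", "LAnkle",
    "REye", "LEye", "REar", "LEar", "LBigToe", "LSmallToe", "LHeel",
    "RBigToe", "RSmallToe", "RHeel",
]


def parse_openpose_frame(json_text, min_conf=0.1):
    """Return one {keypoint name: (x, y, confidence)} dict per detected person.

    Keypoints with confidence below min_conf are omitted (undetected parts
    are written as 0, 0, 0).
    """
    frame = json.loads(json_text)
    people = []
    for person in frame.get("people", []):
        flat = person["pose_keypoints_2d"]  # [x0, y0, c0, x1, y1, c1, ...]
        keypoints = {}
        for idx, name in enumerate(BODY_25):
            x, y, conf = flat[3 * idx: 3 * idx + 3]
            if conf >= min_conf:
                keypoints[name] = (x, y, conf)
        people.append(keypoints)
    return people


# A made-up frame with one person in which only the neck and right knee were found.
flat = [0.0] * 75
flat[3:6] = [120.0, 80.0, 0.9]     # Neck (index 1)
flat[30:33] = [110.0, 200.0, 0.8]  # RKnee (index 10)
sample = json.dumps({"version": 1.3, "people": [{"pose_keypoints_2d": flat}]})
print(parse_openpose_frame(sample))
# [{'Neck': (120.0, 80.0, 0.9), 'RKnee': (110.0, 200.0, 0.8)}]
```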

AlphaPose is another open-source, high-accuracy estimator; it links the poses that belong to the same baby across video frames using an efficient pose tracker called Pose Flow. KAPAO, the third estimator, treats keypoints and poses as objects: it detects both simultaneously and fuses the keypoint detections with the pose detections to predict each baby’s pose as a unified set.
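The fusion idea behind KAPAO can be sketched as follows: a pose detection supplies a rough position for every keypoint, and a separately detected keypoint of the same type, if it lies close enough, replaces that rough position. This is a simplified illustration under stated assumptions, not KAPAO's exact procedure; the `fuse` function, the 20-pixel radius, and the highest-confidence-first rule are hypothetical.

```python
import math


def fuse(poses, keypoint_objects, max_dist=20.0):
    """Refine pose-object keypoints with nearby keypoint-object detections.

    poses: list of {keypoint name: (x, y)} predicted by pose detections.
    keypoint_objects: list of (name, x, y, confidence) keypoint detections.
    max_dist: hypothetical pixel radius for accepting a match.
    """
    fused = [dict(p) for p in poses]  # copy so the input is left untouched
    claimed = set()                   # (pose index, keypoint name) already refined
    # Higher-confidence keypoint detections claim matches first.
    for name, x, y, conf in sorted(keypoint_objects, key=lambda k: -k[3]):
        best, best_d = None, max_dist
        for i, pose in enumerate(fused):
            if name in pose and (i, name) not in claimed:
                px, py = pose[name]
                d = math.hypot(px - x, py - y)
                if d <= best_d:
                    best, best_d = i, d
        if best is not None:
            fused[best][name] = (x, y)
            claimed.add((best, name))
    return fused


poses = [{"LWrist": (50, 60), "RWrist": (90, 60)}]
detections = [("LWrist", 53, 64, 0.9), ("RWrist", 200, 200, 0.8)]
print(fuse(poses, detections))
# [{'LWrist': (53, 64), 'RWrist': (90, 60)}]
```

The left wrist detection lies 5 pixels from the pose's estimate and replaces it; the right wrist detection is too far away, so the pose's own estimate is kept.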

The system uses a deep-learning-based human pose estimation method that operates on skeletal keypoints. Its output is a label-based analysis report, with a reported accuracy of 99.83%, from which the baby’s behavior can be interpreted from these non-verbal poses.
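Before skeletal keypoints are fed to a classifier, they are often normalized so that the same pose gives similar features regardless of where the baby is in the frame or how close the camera is. The study does not describe its preprocessing, so the sketch below shows one common choice as an assumption: shift all keypoints so the mid-hip is the origin and divide by torso length (neck to mid-hip). The function name and the handling of missing keypoints are hypothetical.

```python
import math


def normalize_skeleton(keypoints, names, center="MidHip", ref=("Neck", "MidHip")):
    """Convert {name: (x, y)} into a translation- and scale-invariant vector.

    names fixes the output order so vectors from different frames line up.
    """
    cx, cy = keypoints[center]
    (ax, ay), (bx, by) = keypoints[ref[0]], keypoints[ref[1]]
    scale = math.hypot(ax - bx, ay - by)  # torso length in pixels
    if scale == 0:
        raise ValueError("reference segment has zero length")
    features = []
    for name in names:
        # Assumption: a missing keypoint is placed at the center, i.e. (0.0, 0.0).
        x, y = keypoints.get(name, (cx, cy))
        features.extend([(x - cx) / scale, (y - cy) / scale])
    return features


names = ["Neck", "MidHip", "RKnee"]
kp = {"Neck": (100, 50), "MidHip": (100, 150), "RKnee": (80, 220)}
# The same pose, shifted and seen at twice the size.
shifted = {k: (2 * x + 30, 2 * y + 10) for k, (x, y) in kp.items()}
print(normalize_skeleton(kp, names))       # [0.0, -1.0, 0.0, 0.0, -0.2, 0.7]
print(normalize_skeleton(shifted, names))  # [0.0, -1.0, 0.0, 0.0, -0.2, 0.7]
```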

The estimator can then be used to predict a baby’s body language and understand what the baby wants or needs. The method could also be applied to tracking babies in real time, especially crawlers, and to developing safety tools for them.

Pose estimation for babies could also find applications in parental guidance, health monitoring, and infant development studies. It could raise overall awareness and understanding of baby behavior, helping parents become better equipped to care for their babies.