Angle-Insensitive Human Motion and Posture Recognition Based on 4D Imaging Radar and Deep Learning Classifiers

Human activity recognition (HAR) is a research area that aims to develop systems and techniques that automatically detect, recognize, and categorize human activities from sensor data. This work addresses the challenges of HAR in home environments.

HAR is becoming increasingly important for supporting the aging population. The paper focuses on radar-based HAR, which offers advantages in user compliance over other sensors, such as cameras and wearable devices, because it is more privacy-preserving, contactless, and robust to lighting and occlusion.

Micro-Doppler signatures are typically used as one of the main data representations for HAR, in conjunction with classification algorithms based on deep learning. However, this approach has two limitations: movements at unfavorable aspect angles (i.e., those close to 90 degrees with respect to the line of sight of the radar) are difficult to classify, and static postures that occur between continuous sequences of activities are difficult to recognize.
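The aspect-angle limitation follows from the standard monostatic Doppler relation, in which only the radial component of a target's velocity produces a frequency shift. The sketch below illustrates this with a point target; the function name `doppler_shift_hz` and the 60 GHz default carrier are assumptions chosen for illustration and are not taken from the paper.

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0


def doppler_shift_hz(speed_mps, aspect_deg, carrier_hz=60e9):
    """Doppler shift of a point target moving at `speed_mps`.

    `aspect_deg` is the angle between the velocity vector and the radar
    line of sight. The 60 GHz carrier is an assumed, illustrative value.
    """
    wavelength_m = SPEED_OF_LIGHT_MPS / carrier_hz
    # Only the velocity component along the line of sight contributes.
    radial_speed_mps = speed_mps * math.cos(math.radians(aspect_deg))
    return 2.0 * radial_speed_mps / wavelength_m


# Compare a radial walk with increasingly tangential ones.
for angle in (0.0, 45.0, 80.0, 90.0):
    print(f"{angle:5.1f} deg -> {doppler_shift_hz(1.0, angle):8.2f} Hz")
```

As the aspect angle approaches 90 degrees, the shift tends toward zero, which is why a Doppler-only representation carries little information for tangential movements and static postures.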

The paper proposes an approach to overcome these limitations by using millimeter-wave (mm-wave) 4D imaging radars, which can provide additional information such as azimuth and elevation angles besides the conventional range, Doppler, received power, and time features.
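To make the role of the extra angular information concrete, the following sketch converts one radar detection from range, azimuth, and elevation into a Cartesian point of the kind that forms a point cloud. The `Detection` type, its field names, and the axis convention (y along boresight, x to the right, z up) are assumptions for illustration; the paper's radar may use a different convention.

```python
import math
from typing import NamedTuple


class Detection(NamedTuple):
    """One hypothetical radar detection; field names are illustrative."""
    range_m: float
    azimuth_deg: float
    elevation_deg: float
    doppler_mps: float
    power_db: float


def to_cartesian(det):
    """Map (range, azimuth, elevation) to (x, y, z) in metres.

    Assumed convention: y points along boresight, x to the right, z up.
    """
    az = math.radians(det.azimuth_deg)
    el = math.radians(det.elevation_deg)
    horizontal_m = det.range_m * math.cos(el)  # projection on the x-y plane
    x = horizontal_m * math.sin(az)
    y = horizontal_m * math.cos(az)
    z = det.range_m * math.sin(el)
    return (x, y, z)


point = to_cartesian(Detection(2.0, 30.0, 10.0, 0.0, -40.0))
print(point)
```

Because the Cartesian position does not depend on Doppler, a point obtained this way remains informative even when the target is static or moving tangentially.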

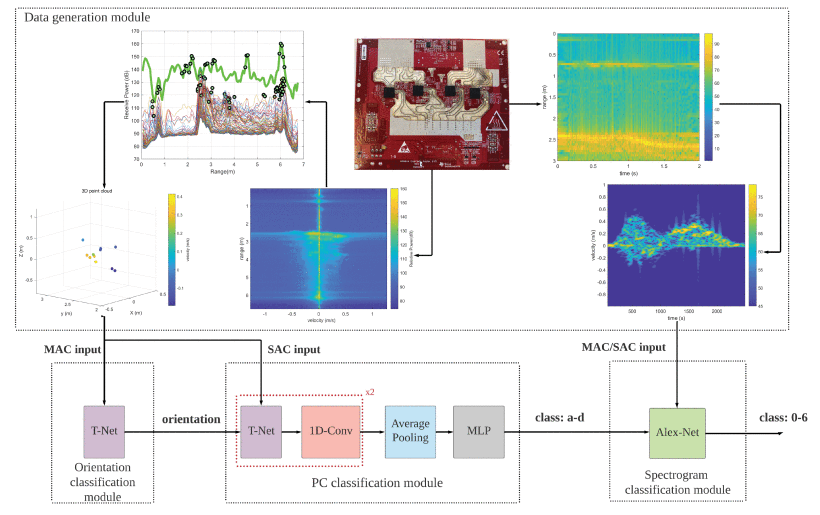

A hierarchical processing and classification pipeline is designed to fully exploit all the information available from mm-wave 4D imaging radars. The proposed pipeline uses the two complementary data representations of Point Cloud (PC) and spectrogram, which capture the spatial and temporal features of the activities, respectively. The pipeline consists of four stages: data acquisition, data preprocessing, feature extraction, and classification.

In the data acquisition stage, a mm-wave 4D imaging radar collects the raw data from the scene. The data preprocessing stage applies noise reduction, clutter removal, and range alignment techniques to the raw data. The feature extraction stage transforms the preprocessed data into PC and spectrogram representations and then extracts relevant features from them. The classification stage uses two deep learning classifiers, one for each data representation, and combines their outputs to obtain the final activity label.
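The text does not specify how the two classifier outputs are combined. One common option is a weighted average of the per-class probabilities (late fusion), sketched below under that assumption. The function `fuse_predictions`, the `pc_weight` parameter, and the example probability values are hypothetical and are not the authors' method or results.

```python
ACTIVITIES = ["walking", "sitting", "standing", "lying", "bending", "squatting"]


def fuse_predictions(pc_probs, spec_probs, pc_weight=0.5):
    """Weighted average of two per-class probability vectors.

    Assumed fusion rule for illustration only. Returns the index of the
    winning class and the fused probability vector.
    """
    if len(pc_probs) != len(spec_probs):
        raise ValueError("both classifiers must score the same classes")
    if not 0.0 <= pc_weight <= 1.0:
        raise ValueError("pc_weight must lie in [0, 1]")
    fused = [
        pc_weight * p + (1.0 - pc_weight) * s
        for p, s in zip(pc_probs, spec_probs)
    ]
    best = max(range(len(fused)), key=fused.__getitem__)
    return best, fused


# Made-up scores: the PC branch favors "sitting", the spectrogram branch
# is undecided between "sitting" and "squatting".
pc_scores = [0.05, 0.60, 0.10, 0.05, 0.05, 0.15]
spec_scores = [0.05, 0.35, 0.05, 0.05, 0.10, 0.40]
label_index, fused_scores = fuse_predictions(pc_scores, spec_scores)
print(ACTIVITIES[label_index])
```

A fixed weight is the simplest choice; it could instead be tuned on validation data or replaced by a rule that selects one branch depending on whether the target is moving.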

The performance of the proposed pipeline is evaluated using an experimental dataset with 6 activities performed by 8 participants of different body shapes, weights, and kinematic patterns. The activities include walking, sitting, standing, lying, bending, and squatting. The information that the collected data provide on range, Doppler, azimuth, elevation, received power, and time is explored.

The proposed pipeline is compared with alternative baseline approaches, such as using only PC or spectrogram data or using different classifiers. The effect of key parameters on the results, such as the amount of training data, signal-to-noise levels, and virtual aperture size, is also investigated. These preliminary results show that the proposed pipeline achieves an average accuracy of 97.8%, which is higher than that achieved by the alternative baseline approaches.

There is still scope to improve the pipeline with additional data from more participants with diverse body shapes, sizes, heights, ages, and physical characteristics, and with more challenging physical activities, such as falling or other critical activities, to support its use in a realistic HAR setting.