A Radar-Based Human Activity Recognition Using a Novel 3-D Point Cloud Classifier

Human activity recognition (HAR) has gained prominence in the advancing areas of machine learning, pattern recognition, context awareness, and human body perception. HAR applications typically use wearable sensors, radar, radio-frequency signals, or cameras to gather information. However, existing benchmark datasets do not support sufficient algorithm training, which compromises recognition accuracy.

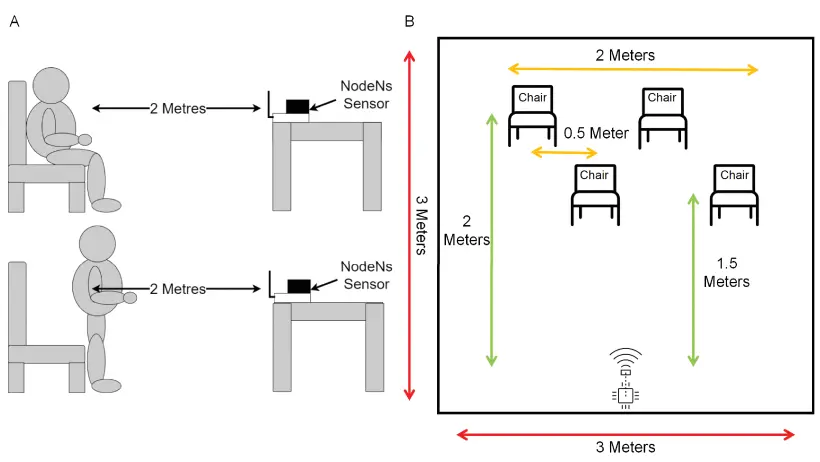

This work uses NodeNs ZERO 60 GHz IQ radar sensors, which produce point cloud data that is more accessible and straightforward to work with for capturing human movement information. Point cloud data is a set of vectors containing geometric positions in a spatial coordinate system. The device scans the area and records data points, each of which contains 3-D coordinates. The points are numerous and together form what are known as clouds, hence the term point cloud data.
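As a minimal sketch of the data structure described above, one radar frame can be held as a list of `(x, y, z)` tuples. The helper names `centroid` and `bounding_box`, the metre units, and the sample coordinates are assumptions for illustration only; they are not taken from the sensor's API or from the paper.

```python
from typing import List, Tuple

# One point is a 3-D coordinate; metres are an assumed unit.
Point = Tuple[float, float, float]


def centroid(cloud: List[Point]) -> Point:
    """Mean position of all points in one frame."""
    n = len(cloud)
    if n == 0:
        raise ValueError("empty point cloud")
    return (
        sum(p[0] for p in cloud) / n,
        sum(p[1] for p in cloud) / n,
        sum(p[2] for p in cloud) / n,
    )


def bounding_box(cloud: List[Point]) -> Tuple[Point, Point]:
    """Axis-aligned bounding box as (min corner, max corner)."""
    if not cloud:
        raise ValueError("empty point cloud")
    xs, ys, zs = zip(*cloud)
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))


# Hypothetical frame with made-up coordinates.
frame: List[Point] = [
    (0.0, 1.0, 0.5),
    (0.2, 1.0, 1.5),
    (0.0, 1.2, 1.0),
    (0.2, 1.2, 1.0),
]
```

Simple summaries such as the centroid height or the bounding-box extent are one plausible way to turn a variable-size cloud into fixed-size features, although the source does not say which features were actually used.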

The paper contributes a detailed study of contemporary human activity datasets to determine the trade-off between recognition accuracy, precision, and resource usage. It explores state-of-the-art HAR methods to develop features for use by deep learning algorithms. It also compiles a comprehensive 3-D point cloud dataset for studying daily human activities.

Human movements can be divided into three basic categories: dynamic activities (walking, falling, or bending), static activities (sitting or standing), and posture transitions. Each activity itself can have many variations.

The proposed dataset contains several combinations of activities and subjects to capture the variations of these activities. The dataset is divided into four classes representing samples from one or more subjects. Each sample consists of five different activities: standing, sitting, bending, walking, and falling, performed multiple times by the subject(s). To simplify data normalization, every activity by every subject was sampled for a fixed duration of 5 seconds. This dataset will help researchers investigate the resilience of deep-learning algorithms in real-world scenarios.
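The fixed 5-second sampling can be illustrated with a short sketch that cuts one labelled sample out of a timestamped frame stream. The `(timestamp, points)` frame layout, the function name `cut_sample`, and the 10 frames-per-second rate in the example are assumptions; the source only states the 5-second duration.

```python
from typing import List, Tuple

Point = Tuple[float, float, float]
# Assumed layout: (timestamp in seconds, points captured at that time).
Frame = Tuple[float, List[Point]]

WINDOW_SECONDS = 5.0  # fixed sample duration stated in the text


def cut_sample(
    frames: List[Frame], start: float, duration: float = WINDOW_SECONDS
) -> List[Frame]:
    """Return the frames with start <= timestamp < start + duration, in time order."""
    end = start + duration
    ordered = sorted(frames, key=lambda f: f[0])
    return [f for f in ordered if start <= f[0] < end]


# Hypothetical 8-second recording at an assumed 10 frames per second.
recording: List[Frame] = [(i / 10, [(0.0, 0.0, 1.0)]) for i in range(80)]

# One labelled sample: the first 5 seconds, tagged with its activity.
sample = {"activity": "walking", "frames": cut_sample(recording, start=0.0)}
```

Because every sample spans the same duration, sequences from different subjects have comparable lengths, which is what makes later normalization simpler.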

The data was pre-processed for feature extraction using density-based spatial clustering of applications with noise (DBSCAN) to organize the cloud points into clusters. The DBSCAN algorithm defines each point cloud cluster as a set of densely connected points. An advanced deep learning algorithm, Long Short-Term Memory (LSTM), then takes the cluster sets extracted by DBSCAN as input to the neural network for processing and classifying the data. The LSTM outputs are used to create the benchmark dataset. The dataset is divided into 80% training data and 20% testing data, which keeps the training and testing data mutually exclusive.
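The clustering and splitting steps can be sketched in plain Python. This is a textbook DBSCAN and a shuffled 80/20 split under stated assumptions, not the paper's implementation: the parameter values `eps` and `min_pts`, the shuffling, the seed, and the sample points are all illustrative choices that the source does not specify.

```python
import math
import random
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]
NOISE = -1  # label for points that belong to no cluster


def region_query(points: Sequence[Point], i: int, eps: float) -> List[int]:
    """Indices of all points within eps of points[i], including i itself."""
    return [j for j, q in enumerate(points) if math.dist(points[i], q) <= eps]


def dbscan(points: Sequence[Point], eps: float, min_pts: int) -> List[int]:
    """Return one label per point: 0, 1, ... for clusters, NOISE for outliers."""
    labels: List[Optional[int]] = [None] * len(points)
    cluster = -1
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbours = region_query(points, i, eps)
        if len(neighbours) < min_pts:
            labels[i] = NOISE  # may be relabelled later as a border point
            continue
        cluster += 1
        labels[i] = cluster
        queue = [j for j in neighbours if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster  # border point: joins, but does not expand
            if labels[j] is not None:
                continue
            labels[j] = cluster
            j_neighbours = region_query(points, j, eps)
            if len(j_neighbours) >= min_pts:  # core point: keep expanding
                queue.extend(j_neighbours)
    return [NOISE if lab is None else lab for lab in labels]


def train_test_split(samples: Sequence, test_fraction: float = 0.2, seed: int = 0):
    """Shuffle, then return (train, test) with disjoint contents."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)
    n_test = round(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]


# Made-up points: two dense groups and one isolated outlier.
points: List[Point] = [
    (0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0),
    (5.0, 5.0, 5.0), (5.1, 5.0, 5.0), (5.0, 5.1, 5.0),
    (20.0, 20.0, 20.0),
]
labels = dbscan(points, eps=0.3, min_pts=3)
train, test = train_test_split(range(10))
```

The pairwise `region_query` is quadratic in the number of points, which is acceptable for a sketch; a spatial index would be the usual choice for larger clouds.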

Upon evaluation, the results demonstrated that the deep learning algorithm, LSTM, could classify the test data for the various experiments with over 95% accuracy. The proposed benchmark dataset is more suitable for deep-learning algorithm training than the traditional sensor data format. The radar sensors can gather point cloud data more efficiently than Lidar devices. The dataset is also more accurate and more relevant to real-world problems than existing benchmark datasets.
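For reference, classification accuracy is the fraction of test samples whose predicted label matches the true label. The sketch below computes it together with per-pair confusion counts; the function names and the label lists are hypothetical placeholders and do not reproduce the paper's results.

```python
from collections import Counter
from typing import Sequence

ACTIVITIES = ["standing", "sitting", "bending", "walking", "falling"]


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of predictions that match the true labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("label sequences must be non-empty and of equal length")
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def confusion_counts(y_true: Sequence[str], y_pred: Sequence[str]) -> Counter:
    """Count how often each (true, predicted) pair occurs."""
    return Counter(zip(y_true, y_pred))


# Made-up labels for illustration only: one 'bending' sample is misread as 'sitting'.
y_true = ["standing", "sitting", "bending", "walking", "falling"]
y_pred = ["standing", "sitting", "sitting", "walking", "falling"]

acc = accuracy(y_true, y_pred)
counts = confusion_counts(y_true, y_pred)
```

The confusion counts show which activities are mistaken for one another, which a single accuracy figure cannot.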

The benchmark dataset can also be used in future case studies to better understand the benefits and challenges of various HAR classification methods for point clouds.