Lock-in Time-of-Flight (ToF) Cameras: A Survey

A Time-of-Flight (ToF) camera sensor delivers 3-D imaging at a high frame rate, providing both intensity data and range information for every pixel. Progress in microelectronics, micro-optics, and microtechnology has contributed greatly to the growth of ToF camera sensors. Pixel-wise matching of depth and intensity images sets ToF cameras apart from other technologies. Efforts are under way to optimize the cameras and improve their usability.

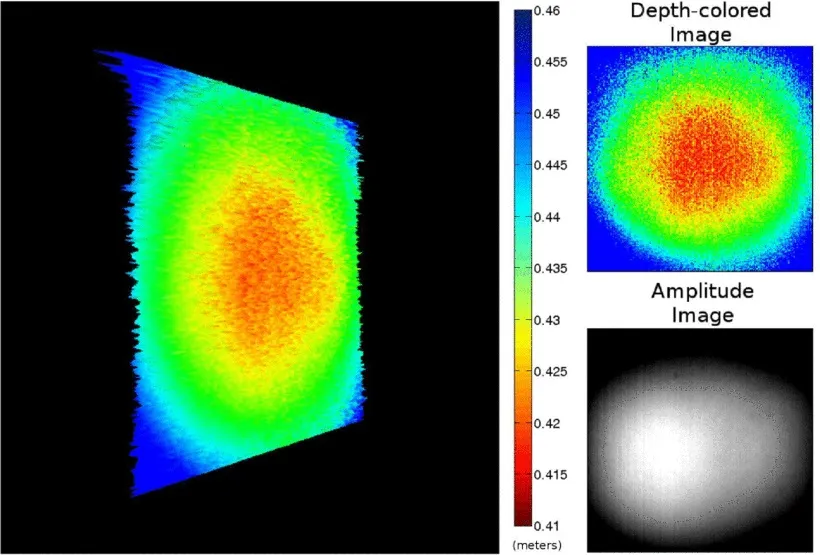

Depth is computed using the time-of-flight principle with either pulsed or continuous-wave modulation. Applications using pulse-based ToF cameras are scarce compared with those using continuous-wave modulation and demodulation lock-in pixels. Compared with other technologies for obtaining scene depth, ToF cameras offer attractive properties: their own illumination, no moving parts, a compact design, and complete image acquisition at a high frame rate.
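The two modulation schemes can be illustrated with a minimal sketch. It is not taken from any specific camera: the function names, the four-sample demodulation scheme, and the sampling convention (sample `a_i` proportional to `cos(phase - i * 90°)` plus an offset) are assumptions chosen for illustration, and real sensors may use a different sign or sample ordering.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def pulsed_depth(round_trip_time_s):
    """Pulsed ToF: light travels to the target and back, so halve the path."""
    return C * round_trip_time_s / 2.0


def cw_depth(a0, a1, a2, a3, mod_freq_hz):
    """Continuous-wave ToF from four samples taken 90 degrees apart.

    Assumed convention (hypothetical): a_i = offset + amp * cos(phase - i*pi/2),
    so a1 - a3 is proportional to sin(phase) and a0 - a2 to cos(phase).
    """
    phase = math.atan2(a1 - a3, a0 - a2) % (2.0 * math.pi)
    return C * phase / (4.0 * math.pi * mod_freq_hz)


def unambiguous_range(mod_freq_hz):
    """Largest depth measurable before the phase wraps around."""
    return C / (2.0 * mod_freq_hz)
```

Under these assumptions, a 20 MHz modulation frequency gives an unambiguous range of roughly 7.49 m; targets beyond that distance would alias back into the measurable interval.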

Before the advent of ToF cameras, depth was computed with camera-based and laser-based systems, each of which had drawbacks. Camera-based systems, despite the variety of methods available, produced ambiguous results and required multiple images, which increased overall cost. In contrast, a ToF camera sensor provides more precise depth information from a single image. Laser-based systems offered a long depth range and accurate, reliable output, but they required high power and additional moving parts. A ToF camera sensor avoids these issues: it is compact and portable, requires less power, and has a significantly shorter image acquisition time.

Despite their accuracy, ToF cameras are still subject to systematic and non-systematic errors. Systematic errors can arise from reflected amplitude that is too low or overexposed, from higher temperatures, or from differing integration times. Non-systematic errors can arise from interference among multiple light reflections captured at each sensor pixel, from light scattering, or from motion blur. Calibration techniques are used to reduce systematic errors, and filtering techniques are used to reduce non-systematic errors.
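The split between calibration and filtering can be sketched as follows. The text does not name particular techniques, so both choices here are assumptions: a hypothetical linear correction stands in for calibration, and a 3x3 median filter stands in for filtering. The depth image is a plain list of rows, in meters.

```python
from statistics import median


def correct_systematic(depth_m, offset_m=0.0, scale=1.0):
    """Hypothetical linear calibration model: corrected = scale * measured + offset.

    In practice the offset and scale would be fitted against reference distances.
    """
    return scale * depth_m + offset_m


def median_filter_3x3(depth):
    """Replace each pixel with the median of its 3x3 neighbourhood.

    At the image border the window is cropped to the pixels that exist.
    """
    rows, cols = len(depth), len(depth[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [
                depth[rr][cc]
                for rr in range(max(0, r - 1), min(rows, r + 2))
                for cc in range(max(0, c - 1), min(cols, c + 2))
            ]
            out[r][c] = median(window)
    return out
```

A median filter is used in this sketch because it suppresses isolated outliers while preserving depth edges better than a mean filter would; other filters could serve the same role.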

A review of ToF camera applications to date shows that the most appreciated feature of the sensor is its ability to deliver complete scene depth at a high frame rate without moving parts. ToF cameras are used successfully in depth-based foreground/background segmentation methods. They suit human environments, are eye-safe, and do not require physical contact. There is still good scope for improving their precision and acquisition range, which would strengthen their position in the 3-D depth acquisition field.
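Depth-based foreground/background segmentation can be illustrated in its simplest form as a per-pixel depth threshold. This is only a sketch under stated assumptions: the function name and the convention that a depth of 0 marks an invalid pixel are hypothetical, and published methods are typically more elaborate.

```python
def segment_foreground(depth, max_depth_m):
    """Return a boolean mask: True where a pixel is closer than max_depth_m.

    Assumption: a depth of 0 (or less) marks an invalid measurement and is
    treated as background.
    """
    return [[0.0 < d <= max_depth_m for d in row] for row in depth]


# Example: a 2x3 depth image in meters, foreground within 1.5 m
mask = segment_foreground([[0.8, 1.2, 3.0], [0.0, 1.5, 2.4]], max_depth_m=1.5)
```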