TSSTDet: Transformation-Based 3-D Object Detection via a Spatial Shape Transformer

The safe and efficient operation of autonomous vehicles hinges on their ability to accurately detect, identify, and map nearby 3D objects. Existing 3D detection methods face two key problems: (i) parts of objects are sometimes missing from the sensor data because of occlusion (for example, by other vehicles) or sensor limitations, and (ii) objects appear in different orientations (rotations), which makes them harder to identify accurately. Traditional detection methods also rely on complex neural networks that require substantial processing power, and they depend on building large datasets of object orientations and completed shapes, which further increases the computational cost.

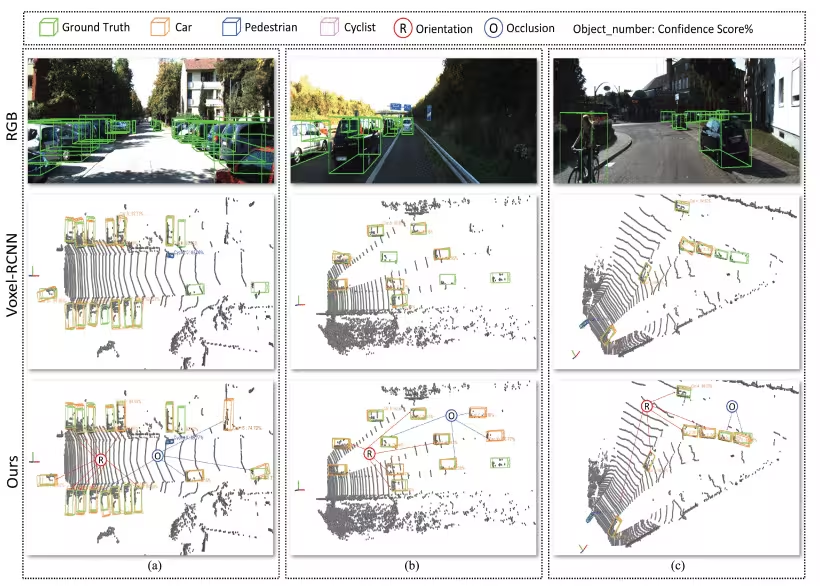

The paper introduces TSSTDet, a framework for transformation-based 3-D object detection via a spatial shape transformer, which is designed to handle these problems. Its transformer-based design targets incomplete shapes and varied orientations, and the authors report a significant improvement in detection performance.

One of the standout features of TSSTDet is its ability to handle objects that appear at different rotations. The system uses a rotational transformation convolutional backbone (RTConv), which lets an object be identified correctly regardless of its orientation, without additional data augmentation. For example, whether a car is facing the sensor directly or turned sideways, the system should still detect it accurately.

RTConv encodes LiDAR point clouds to extract transformation-equivariant features. Rotational-transformation pooling then aligns and combines the scene-level voxel features into bird's-eye view (BEV) feature maps. Finally, a region proposal network (RPN) generates high-quality 3-D proposals.
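The align-and-pool idea can be illustrated with a toy example. The sketch below is not the paper's RTConv: it assumes a stand-in "backbone" that simply counts points per BEV cell, and a rotation group of four 90-degree turns. All function names (`rotate_z`, `bev_occupancy`, `rot90_grid`, `rotation_pooled_bev`) are hypothetical and chosen only for illustration.

```python
import math

def rotate_z(points, angle):
    """Rotate (x, y, z) points about the vertical z-axis by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in points]

def bev_occupancy(points, grid=4, extent=2.0):
    """Toy stand-in for a backbone: count points per BEV cell over [-extent, extent)."""
    cell = 2.0 * extent / grid
    bev = [[0] * grid for _ in range(grid)]
    for x, y, _ in points:
        col = math.floor((x + extent) / cell)  # x indexes columns
        row = math.floor((y + extent) / cell)  # y indexes rows
        if 0 <= row < grid and 0 <= col < grid:
            bev[row][col] += 1
    return bev

def rot90_grid(bev):
    """Rotate a square grid by +90 degrees, matching rotate_z(points, pi / 2)."""
    g = len(bev)
    out = [[0] * g for _ in range(g)]
    for r in range(g):
        for c in range(g):
            out[c][g - 1 - r] = bev[r][c]
    return out

def rotation_pooled_bev(points):
    """Encode four rotated copies, align each map to the input frame, then max-pool."""
    pooled = None
    for k in range(4):
        bev = bev_occupancy(rotate_z(points, k * math.pi / 2))
        for _ in range((4 - k) % 4):  # undo the k quarter-turns on the grid
            bev = rot90_grid(bev)
        if pooled is None:
            pooled = bev
        else:
            pooled = [[max(a, b) for a, b in zip(ra, rb)]
                      for ra, rb in zip(pooled, bev)]
    return pooled

points = [(0.5, 0.3, 0.0), (1.4, -0.6, 0.2), (-1.3, 1.2, 0.1)]
pooled = rotation_pooled_bev(points)
# Rotating the input rotates the pooled map in the same way (equivariance).
print(rotation_pooled_bev(rotate_z(points, math.pi / 2)) == rot90_grid(pooled))
```

Because the toy occupancy feature is exactly equivariant under quarter-turns, every aligned map is identical and pooling changes nothing; with a learned backbone the aligned maps would differ, and pooling is what would combine them.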

Often, LiDAR sensors cannot capture an entire object because of occlusion or viewing angle, as when a car is partially hidden behind another vehicle. TSSTDet uses a voxel-point shape transformer (VPST), a deep learning module that predicts the full 3D shape of an object and "fills in" points in the space it occupies, even when parts are missing. The partial object shape is segmented into patches and encoded in a volumetric grid space, which allows an autoregressive model to be trained efficiently to reconstruct the hidden parts.
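To make the patch encoding concrete, here is a minimal sketch of how a partial point cloud could be turned into a token sequence for an autoregressive model. The encoding shown (a 4×4×4 occupancy grid split into 2×2×2 patches, each packed into an 8-bit integer) is an assumption made for illustration, not the scheme described in the paper, and the names `voxelize` and `patch_tokens` are hypothetical.

```python
import math
from itertools import product

def voxelize(points, grid=4, extent=2.0):
    """Return the set of occupied (i, j, k) voxels over [-extent, extent)^3."""
    cell = 2.0 * extent / grid
    occupied = set()
    for p in points:
        idx = tuple(math.floor((v + extent) / cell) for v in p)
        if all(0 <= i < grid for i in idx):
            occupied.add(idx)
    return occupied

def patch_tokens(occupied, grid=4, patch=2):
    """Scan the grid in patch^3 blocks; pack each block's occupancy into one integer."""
    n = grid // patch
    tokens = []
    for px, py, pz in product(range(n), repeat=3):
        token = 0
        for bit, (dx, dy, dz) in enumerate(product(range(patch), repeat=3)):
            voxel = (px * patch + dx, py * patch + dy, pz * patch + dz)
            if voxel in occupied:
                token |= 1 << bit
        tokens.append(token)
    return tokens

# A "partial" object: only two corners of the volume were observed.
partial = [(-1.5, -1.5, -1.5), (1.5, 1.5, 1.5)]
tokens = patch_tokens(voxelize(partial))
print(tokens)  # one integer token per patch, in raster order
```

An autoregressive model would then be trained to predict each token from the ones before it, so that empty or unobserved patches can be completed at inference time. That model is outside the scope of this sketch.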

TSSTDet then applies an attention-fusion and refinement (AFR) module to refine the detections further. This module combines features from different stages of the detection pipeline and emphasizes the most informative ones, so that the final bounding boxes for detected objects are more accurate.
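The general idea of attention-based fusion can be sketched as a weighted sum in which the weights come from a softmax over relevance scores. The example below assumes scaled dot-product scoring against a single query vector; the source does not specify how AFR computes its weights, so this is an illustrative stand-in, and `softmax` and `attention_fuse` are hypothetical names.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention_fuse(features, query):
    """Fuse equal-length feature vectors, weighting each by its similarity to `query`."""
    scale = math.sqrt(len(query))
    scores = [sum(f * q for f, q in zip(feat, query)) / scale for feat in features]
    weights = softmax(scores)
    fused = [sum(w * feat[d] for w, feat in zip(weights, features))
             for d in range(len(query))]
    return fused, weights

# Two stage-level features (assumed, e.g. one per pipeline stage) and a query.
stage_features = [[1.0, 0.0], [0.0, 1.0]]
fused, weights = attention_fuse(stage_features, query=[1.0, 0.0])
print(weights)  # the first feature matches the query better, so it gets more weight
```

In a real network the query, the features, and any projection matrices would be learned; here they are fixed so the weighting behaviour is easy to inspect.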

Experiments on KITTI and Waymo, two standard benchmarks for 3D object detection in autonomous driving, indicate that the proposed approach is effective and adaptable. The highest average precision (AP) reported was 97.02%, for the Car category under the BEV detection criterion on the KITTI validation set. TSSTDet outperformed current state-of-the-art methods in detecting objects such as cars and pedestrians, and it showed particularly strong results when objects were hard to see because of occlusion or challenging rotations.

By using a transformer architecture to address occlusion and object orientation, the proposed technique paves the way for advances in downstream tasks such as tracking and navigation for autonomous vehicles.