Deep Learning Approach for Detecting Work-Related Stress Using Multimodal Signals

Work-related stress threatens mental and physical health, so it must be assessed in a timely manner to mitigate its adverse effects. The traditional method of evaluating mental stress levels is subjective and time-consuming. This study proposes an accurate, automated algorithm for detecting mental stress levels that adopts a deep learning (DL) approach based on multimodal signals.

Mental stress can be detected from physiological signals because it causes changes in the autonomic nervous system that are reflected in those signals. Among the various physiological signals, the proposed algorithm uses electrocardiogram (ECG), respiration (RESP), and facial images. Because they are sensitive to mental stress, these signals have been adopted in unimodal mental stress detection studies. This study introduces an accurate multimodal mental stress detection algorithm that fuses these signals at different levels (i.e., feature level and decision level).

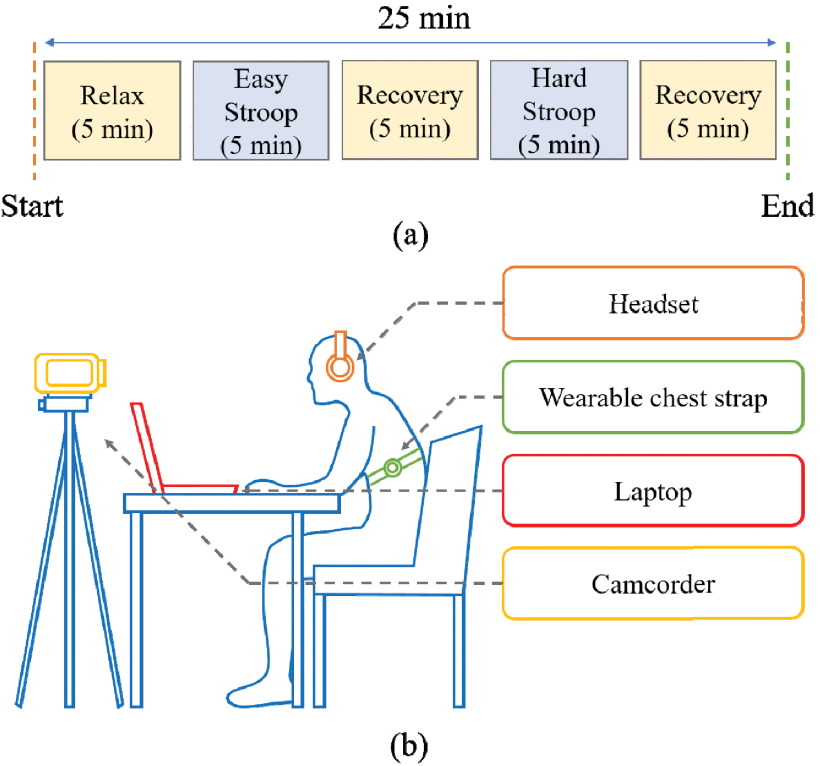

The researchers prepared a dataset to train and validate the DL-based mental stress detection algorithm. An experiment that mimicked stressful office-like situations produced 823 samples, each comprising 10 seconds of ECG, RESP, and facial images together with a corresponding mental stress level label. The samples were captured while participants were in the relaxation, easy Stroop, and hard Stroop task stages, which were labeled as non-stress, medium stress, and high stress, respectively. This study evaluated mental stress detection algorithms for binary classification (i.e., non-stress and stress) and multi-class classification (i.e., non-stress, medium stress, and high stress).
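The labeling scheme described above can be illustrated with a minimal sketch. The stage names and label strings are hypothetical, and the rule that both medium and high stress collapse into a single "stress" class for the binary task is an assumption, since the text does not spell out the mapping.

```python
# Hypothetical stage names and label strings; the dataset's actual
# encoding is not given in the text.
STAGE_TO_MULTICLASS = {
    "relaxation": "non-stress",
    "easy_stroop": "medium stress",
    "hard_stroop": "high stress",
}


def multiclass_label(stage: str) -> str:
    """Map an experiment stage to its three-class stress label."""
    return STAGE_TO_MULTICLASS[stage]


def binary_label(stage: str) -> str:
    """Map an experiment stage to a two-class label.

    Assumption: medium and high stress are merged into "stress".
    """
    if multiclass_label(stage) == "non-stress":
        return "non-stress"
    return "stress"
```

Under this assumption, a sample from the easy Stroop stage is "medium stress" in the multi-class task and "stress" in the binary task.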

The mental stress detection algorithms were based on deep neural networks (DNNs), which featured independent network branches for extracting features from ECG, RESP, and facial images. The feature-level fusion algorithm employed a single decision-making network. In contrast, the decision-level fusion algorithm used multiple decision-making networks and employed a voting method to make final predictions. For the feature-level fusion algorithm, the researchers explored the optimal input condition by varying the combinations of signals.
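The difference between the two fusion levels can be sketched in a few lines. This is an illustration only: it assumes feature-level fusion concatenates the per-branch feature vectors and that the voting method is a simple majority vote with ties resolved in favor of the first-listed prediction. Neither detail is stated in the text, and the function names are hypothetical.

```python
from collections import Counter
from typing import List, Sequence


def fuse_features(ecg: Sequence[float],
                  resp: Sequence[float],
                  face: Sequence[float]) -> List[float]:
    """Feature-level fusion (assumed): concatenate the feature vectors
    from each branch so one decision network sees them all at once."""
    return [*ecg, *resp, *face]


def majority_vote(predictions: Sequence[str]) -> str:
    """Decision-level fusion (assumed): each decision network casts one
    vote and the most frequent label wins. Ties go to the label that
    appears first in `predictions`."""
    if not predictions:
        raise ValueError("at least one prediction is required")
    counts = Counter(predictions)
    best = max(counts.values())
    for label in predictions:
        if counts[label] == best:
            return label
    raise AssertionError("unreachable")


# Usage: three per-modality decisions fused into one final prediction.
final = majority_vote(["stress", "non-stress", "stress"])
```

In the feature-level case a single classifier would consume the output of `fuse_features`, whereas in the decision-level case each modality has its own classifier and only their outputs are combined.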

The mental stress classification performance of the developed algorithms was evaluated through 5-fold cross-validation. In each iteration, data from three folds were used for training, data from one fold were used for validation, and data from the remaining fold were used for testing. As metrics for performance evaluation, the accuracy, area under the receiver operating characteristic curve (AUC), and F1 score were computed. In the case of multi-class classification, both the AUC and F1 scores were macro-averaged.
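The evaluation protocol above can be sketched as follows. The rotation rule, in which the validation fold is the one after the test fold, is an assumption, because the text gives only the 3/1/1 proportions. `fold_roles` and `macro_f1` are hypothetical helper names, and the macro F1 here is the unweighted mean of per-class F1 scores.

```python
from typing import Dict, List, Sequence


def fold_roles(n_folds: int = 5) -> List[Dict[str, List[int]]]:
    """Assign fold indices to train / val / test for each iteration."""
    plans = []
    for i in range(n_folds):
        test = i
        val = (i + 1) % n_folds  # assumption: next fold is validation
        train = [f for f in range(n_folds) if f not in (test, val)]
        plans.append({"train": train, "val": [val], "test": [test]})
    return plans


def macro_f1(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Unweighted mean of per-class F1 over all labels that occur."""
    labels = sorted(set(y_true) | set(y_pred))
    if not labels:
        raise ValueError("no labels to score")
    pairs = list(zip(y_true, y_pred))
    scores = []
    for label in labels:
        tp = sum(t == label and p == label for t, p in pairs)
        fp = sum(t != label and p == label for t, p in pairs)
        fn = sum(t == label and p != label for t, p in pairs)
        denom = 2 * tp + fp + fn
        scores.append(2 * tp / denom if denom else 0.0)
    return sum(scores) / len(scores)
```

With five folds, each fold serves as the test set exactly once, so every sample contributes to the reported test metrics exactly once.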

As a result, the feature-level fusion algorithm that utilized RESP and facial images achieved the best performance in mental stress binary classification, exhibiting 73.3% accuracy, 0.822 AUC, and 0.700 F1 score. In mental stress multi-class classification, the feature-level fusion algorithm that used ECG, RESP, and facial images showed the best performance, with 54.4% accuracy, 0.727 AUC, and 0.508 F1 score.

The proposed algorithms performed well in comparison with conventional machine learning algorithms. This work is the only study to adopt DL-based fusion algorithms to assess work-related stress from multimodal signals. Furthermore, the proposed algorithms were developed on a multimodal dataset collected in office-like situations using wearable sensors and cameras, which supports their adoption in workplaces.