Self-Supervised Monocular Depth Estimation Using Hybrid Transformer Encoder

Depth estimation has significant implications across various fields. Accurate depth estimation is crucial for safe navigation and obstacle avoidance in autonomous driving. In robotics, it aids in navigation and interaction with environments. In augmented and virtual reality, it enhances realism and immersion by accurately mapping virtual objects into real-world spaces.

Camera-based depth estimation systems have recently shown better commercial viability than traditional sensor-based systems. Stereo methods measure disparity between calibrated cameras, while monocular methods estimate disparity between adjacent frames using supervised or unsupervised/self-supervised learning. In recent years, unsupervised learning-based models for monocular depth estimation have received much attention.

This paper introduces an approach to depth estimation from single-camera images that uses a hybrid transformer encoder-decoder. Transformers have been highly successful in several fields, especially natural language processing, but their application in computer vision, and in depth estimation in particular, is relatively new. The method is self-supervised: it learns to estimate depth without labeled depth maps for training. This is particularly valuable where such labeled data is difficult or impossible to obtain.

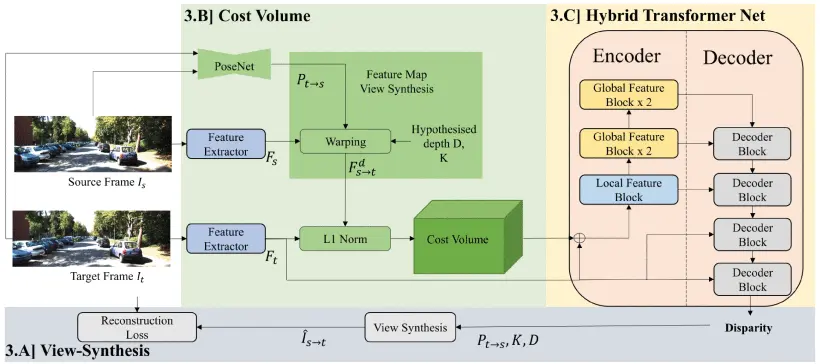

The proposed network first uses a cost-volume structure to estimate depth. In the unsupervised setting, cost-volume-based depth estimation extracts feature points from the input images and view-synthesizes them under a set of assumed distances. The cost volume is then built by measuring the similarity between source and target feature points at each assumed distance.
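The cost-volume idea can be illustrated with a small NumPy sketch. It is a simplification, not the paper's implementation: a horizontal pixel shift stands in for view synthesis under an assumed distance (a real system would warp with camera pose and intrinsics), mean absolute difference stands in for the similarity measure, and the name `build_cost_volume` is hypothetical.

```python
import numpy as np

def build_cost_volume(target_feat, source_feat, shifts):
    """Compare target features with source features under each hypothesis.

    target_feat, source_feat: arrays of shape (C, H, W).
    shifts: candidate horizontal shifts in pixels (ASSUMPTION: a stand-in
            for warping the source view under an assumed distance).
    Returns an array of shape (len(shifts), H, W); lower cost = more similar.
    """
    costs = []
    for s in shifts:
        # Stand-in for view synthesis: circularly shift the source features.
        warped = np.roll(source_feat, shift=s, axis=2)
        # Mean absolute difference over channels as the matching cost.
        costs.append(np.abs(target_feat - warped).mean(axis=0))
    return np.stack(costs, axis=0)

# Toy data: the source is the target shifted left by 3 pixels.
rng = np.random.default_rng(0)
target = rng.standard_normal((8, 4, 16))
source = np.roll(target, shift=-3, axis=2)

shifts = [0, 1, 2, 3, 4]
volume = build_cost_volume(target, source, shifts)

# Per-pixel index of the best-matching hypothesis.
best = volume.argmin(axis=0)
```

In this toy setup the hypothesis that undoes the shift matches the target exactly, so `best` selects it at every pixel. A learned network would instead pass the whole volume on to later stages rather than taking a hard argmin.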

The hybrid transformer encoder network then combines traditional neural network architectures with transformer-based models to convert the cost volume into depth. This hybrid approach aims to leverage the strengths of both architectures: the efficiency and established patterns of convolutional neural networks (CNNs), and the attention mechanism and global context understanding of transformers.

The system uses an attention decoder to strengthen global dependencies in the feature representation. The decoder improves the resolution of a multiscale feature map by performing self-attention operations on low-resolution features.
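As a rough illustration of self-attention applied to a low-resolution feature map, the sketch below flattens the map into tokens, applies single-head scaled dot-product attention, and merges the result into a higher-resolution map. Several parts are assumptions made for the example and are not taken from the paper: the random projection matrices (which would be learned in practice), nearest-neighbour upsampling, additive fusion, and all function names.

```python
import numpy as np

def softmax(x, axis=-1):
    # Subtract the max for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(feat, w_q, w_k, w_v):
    """Single-head self-attention over all spatial positions.

    feat: (C, H, W) feature map; w_q, w_k, w_v: (C, C) projections.
    Returns the attended map (C, H, W) and the weights (H*W, H*W).
    """
    c, h, w = feat.shape
    tokens = feat.reshape(c, h * w).T            # (N, C), one token per pixel
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]))  # every pixel attends to all
    out = attn @ v                               # (N, C)
    return out.T.reshape(-1, h, w), attn

def upsample_nearest(feat, factor=2):
    # ASSUMPTION: nearest-neighbour upsampling, chosen only for simplicity.
    return feat.repeat(factor, axis=1).repeat(factor, axis=2)

rng = np.random.default_rng(0)
channels = 8
low = rng.standard_normal((channels, 4, 6))     # low-resolution features
high = rng.standard_normal((channels, 8, 12))   # higher-resolution features

# Random matrices stand in for learned projection weights.
w_q, w_k, w_v = (rng.standard_normal((channels, channels)) * 0.1 for _ in range(3))

attended, attn = self_attention(low, w_q, w_k, w_v)
fused = high + upsample_nearest(attended)       # ASSUMPTION: additive fusion
```

Running attention at low resolution keeps the attention matrix small (here 24 × 24), since its size grows quadratically with the number of spatial positions.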

One of the critical challenges in monocular depth estimation is the extraction of accurate and meaningful depth information from a single image, which is inherently ambiguous and complex. The proposed method addresses this by enabling the model to focus on relevant features in the image and understand the global context, leading to more accurate depth predictions.

The paper used several common evaluation metrics to compare the proposed network with other modern networks. Results on the KITTI dataset showed significant improvements: the absolute relative error (AbsRel) fell to 0.095, the squared relative error (SqRel) to 0.696, and the root mean squared error (RMSE) to 4.317, while accuracy under the δ < 1.25 threshold rose to 0.902. The model also reduced the parameter count and computational demands. However, challenges remain in accurately estimating distance, and the authors suggest integrating inertial measurement unit (IMU) sensor data or external camera parameters in future work to improve depth estimation.
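For reference, these metrics are usually computed as in the sketch below, which follows the definitions commonly used in depth-estimation benchmarks. The function name `depth_metrics`, the validity mask on the ground truth, and the toy values are illustrative assumptions; the paper's exact evaluation protocol (depth cap, cropping, scaling) is not reproduced here.

```python
import numpy as np

def depth_metrics(pred, gt):
    """Common depth-estimation metrics on positive depth values."""
    pred = np.asarray(pred, dtype=float)
    gt = np.asarray(gt, dtype=float)

    # ASSUMPTION: pixels without ground truth are marked by gt <= 0.
    valid = gt > 0
    pred, gt = pred[valid], gt[valid]

    abs_rel = np.mean(np.abs(pred - gt) / gt)    # AbsRel
    sq_rel = np.mean((pred - gt) ** 2 / gt)      # SqRel
    rmse = np.sqrt(np.mean((pred - gt) ** 2))    # RMSE

    # Threshold accuracy: share of pixels with max(pred/gt, gt/pred) < 1.25.
    ratio = np.maximum(pred / gt, gt / pred)
    a1 = np.mean(ratio < 1.25)

    return {"abs_rel": abs_rel, "sq_rel": sq_rel, "rmse": rmse, "a1": a1}

# Toy example: one of three pixels is predicted at 6 m instead of 4 m.
metrics = depth_metrics(pred=[2.0, 6.0, 10.0], gt=[2.0, 4.0, 10.0])
```

Lower is better for AbsRel, SqRel, and RMSE, while higher is better for the threshold accuracy.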

This paper marks a significant step in computer vision, particularly in the application of transformer models to depth estimation tasks. Its self-supervised learning approach and the hybrid architecture offer a promising direction for future research and applications in fields that rely on accurate depth perception from visual inputs.